The head of Jon Peddie Research, a leading graphics market analysis firm that has been around for nearly 40 years, suggests that Intel might axe its Accelerated Computing Systems and Graphics Group (AXG). The division has been bleeding money for years and has failed to deliver a competitive product for any market segment that it serves. Forget the best graphics cards; Intel just needs to ship fully functional GPUs.

$3.5 Billion Lost

Jon Peddie estimates that Intel has invested about $3.5 billion in its discrete GPU development and that these investments have yet to pay off. In fact, Intel’s AXG has officially lost $2.1 billion since its formal establishment in Q1 2021. Given the track record of Pat Gelsinger, Intel’s chief executive, who has scrapped six businesses since early 2021, JPR suggests that AXG might be next.

“Gelsinger is not afraid to make tough decisions and kill pet projects if they don’t produce — even projects he may personally like,” Peddie wrote in a blog post. “[…] The rumor mill has been hinting that the party is over and that AXG would be the next group to be jettisoned. That rumor was denied by Koduri.”

When Intel disclosed its plans to develop discrete graphics solutions in 2017, it said its GPUs would address computing, graphics, media, imaging, and machine intelligence capabilities for client and datacenter applications. As an added bonus, the Core and Visual Computing Group was meant to address the emerging edge computing market.

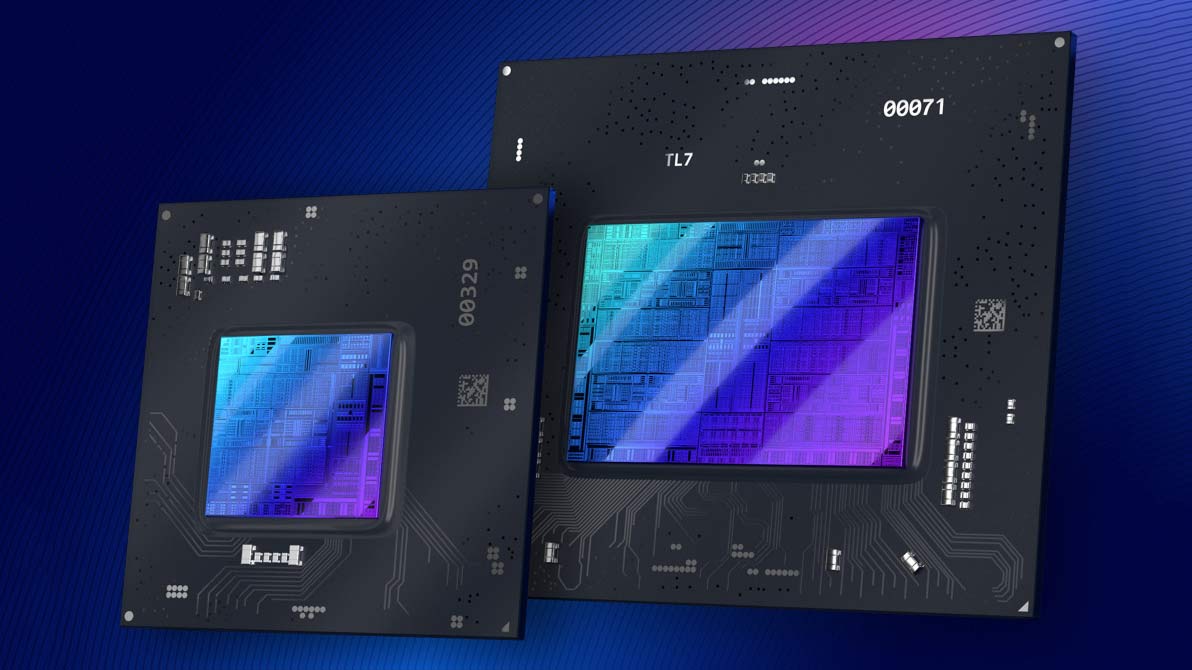

Five years into its discrete GPU journey, the company has released two low-end standalone GPUs addressing cheap PCs and some datacenter applications; launched its low-power graphics architecture for integrated GPUs; delivered oneAPI, which can be used to program CPUs, GPUs, FPGAs, and other compute units; cancelled its Xe-HP architecture for datacenter GPUs; repeatedly postponed shipments of its Ponte Vecchio compute GPU for AI and HPC applications (the most recent delay was partly due to the late arrival of the Intel 4 node); and delayed the launch of an Xe-HPG ACM-G11 gaming GPU by about a year.

Considering how late Intel’s Arc Alchemist 500- and 700-series GPUs already are, and the fact that they will have to compete against AMD’s and Nvidia’s next-generation Radeon RX 7000 and GeForce RTX 40-series products, it is highly likely that they will fail. This will obviously increase Intel’s losses.

To Axe or Not to Axe

Intel’s AXG has spent $3.5 billion without any tangible success so far, Jon Peddie asserts. For Intel, discrete GPUs are a completely new market that requires heavy investments, so the losses are not surprising. Meanwhile, Intel’s own Habana Gaudi2 deep learning processor shows rather tangible performance advantages over Nvidia’s A100 in AI workloads, a market that Intel’s Ponte Vecchio also targets. This success might tip the scales toward axing AXG.

“It is a 50–50 guess whether Intel will wind things down and get out,” said Peddie. “If they don’t, the company is facing years of losses as it tries to punch its way into an unfriendly and unforgiving market.”

Strategic Importance of GPUs

But while it might make sense for Intel to disband AXG and cancel discrete GPU development to cut its losses, Intel pursues several strategically important directions with the AXG division in general and discrete GPU development in particular. The list of development directions includes the following:

- AI/DL/ML applications

- HPC applications

- Competitive GPU architecture and IP to address client discrete and integrated GPUs as well as custom solutions offered by IFS

- Datacenter GPUs for rendering and video encoding

- Edge computing applications with discrete or integrated GPUs

- Hybrid processing units for AI/ML and HPC applications

While discrete GPU development per se has generated only losses for Intel so far (we still wonder how much money the Xe-LP iGPU architecture has earned for Intel after two years on the market), without a competitive GPU-like architecture that can serve everything from a low-end laptop to a supercomputer, Intel will not be able to address many new growth opportunities.

Habana Gaudi2 looks to be a competitive DL solution, but it cannot be used for supercomputing applications. Moreover, without further evolution of Intel’s Xe-HPC datacenter GPU architecture, the company will not be able to build hybrid processing units for AI/ML and HPC applications (e.g., Falcon Shores). Without such XPUs, Intel’s ZettaFLOPS by 2027 plan starts to look increasingly unrealistic.

While Intel’s discrete GPU endeavor has not lived up to expectations, Intel needs an explicitly parallel compute architecture for loads of upcoming applications. GPUs have proven to be the best architecture for highly parallel workloads, no matter whether they require low compute precision like AI/DL/ML applications or full FP64 precision like supercomputing applications.

If Intel pulls the plug on standalone GPU development, it will have to completely redesign its roadmap both in terms of products and in terms of architectures. For example, it will have to find a provider of a competitive GPU architecture for its client processors, as a small in-house iGPU development team within Intel will hardly be able to deliver an integrated graphics solution that would be competitive against those offered by AMD and Apple for their client system-on-chips (SoCs).

Summary

Intel’s discrete GPU endeavor may have already cost the company about $3.5 billion, has not borne fruit so far, and will likely generate further losses. Killing the AXG division seems like an increasingly attractive management decision. However, GPUs and derivative hybrid architectures are strategically important for many markets Intel serves and for applications that it will have to serve in the coming years, so disbanding AXG seems counterproductive. A lot likely hinges on Intel’s graphics driver woes, but fixing the drivers isn’t a quick solution.

What will Pat Gelsinger do? Perhaps we will find out sooner rather than later. “Perhaps the clouds will lift by the end of this quarter,” muses Jon Peddie.