Large companies like Google have been building their own servers for many years in a bid to get machines that best suit their needs. Most of these servers run Intel's Xeon processors, with or without customizations, alongside additional hardware that accelerates certain workloads. For Google, this approach is no longer good enough. This week the company announced that it has hired Intel veteran Uri Frank to lead a newly established division that will develop custom system-on-chips (SoCs) for the company's datacenters.

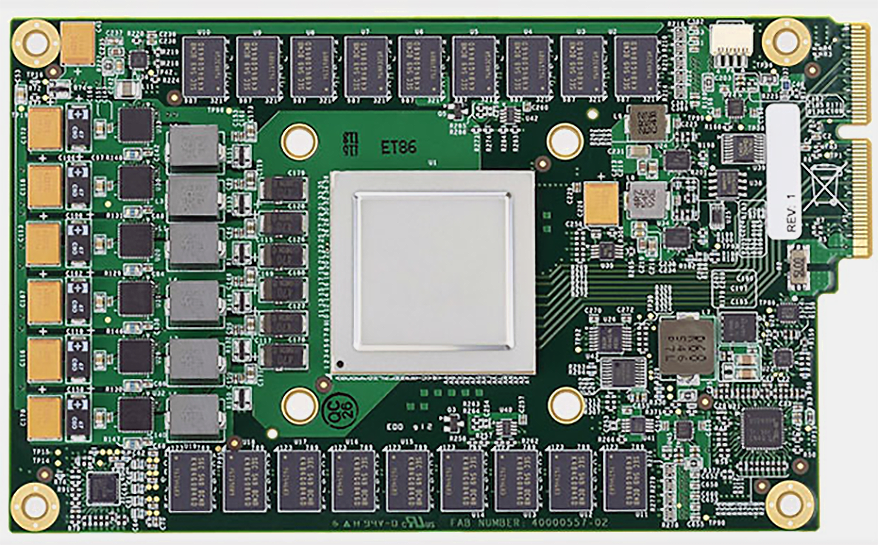

Google is not a newbie when it comes to hardware development. The company introduced its own Tensor Processing Unit (TPU) back in 2015, and today the TPU powers various services, including real-time voice search, photo object recognition, and interactive language translation. In 2018, the company unveiled its video processing units (VPUs) to broaden the number of formats it can distribute videos in. In 2019, it followed with OpenTitan, the first open-source silicon root-of-trust project. Today, Google installs its own and third-party hardware on motherboards alongside Intel Xeon processors. Going forward, the company wants to pack as many capabilities as it can into SoCs to improve performance, reduce latency, and cut the power consumption of its machines.

“To date, the motherboard has been our integration point, where we compose CPUs, networking, storage devices, custom accelerators, memory, all from different vendors, into an optimized system,” Amin Vahdat, Google Fellow and Vice President of Systems Infrastructure, wrote in a blog post. “Instead of integrating components on a motherboard where they are separated by inches of wires, we are turning to SoC designs where multiple functions sit on the same chip, or on multiple chips inside one package.”

These highly integrated SoCs and system-in-packages (SiPs) for datacenters will be developed at a new engineering center in Israel headed by Uri Frank, Google's vice president of engineering for server chip design, who brings 24 years of custom CPU design and delivery experience to the company. The cloud giant plans to recruit several hundred world-class SoC engineers to design its SoCs and SiPs, so these products are unlikely to reach Google's servers in 2022; they will more likely arrive in datacenters by the middle of the decade.

Google envisions tightly integrated SoCs replacing today's relatively disaggregated motherboards. The company is eager to develop the building blocks of its SoCs and SiPs itself, but it is also willing to buy them from third parties when needed.

“Just like on a motherboard, individual functional units (such as CPUs, TPUs, video transcoding, encryption, compression, remote communication, secure data summarization, and more) come from different sources,” said Vahdat. “We buy where it makes sense, build it ourselves where we have to, and aim to build ecosystems that benefit the entire industry.”

Google's foray into datacenter SoCs is consistent with what its rivals Amazon Web Services and Microsoft Azure are doing. AWS already offers instances powered by its own Arm-based Graviton processors, and Microsoft is reportedly developing its own datacenter chip as well. Google has yet to disclose whether it intends to build its own CPU cores or license them from Arm or another party, but since the company is early in its journey, it is probably still weighing different options.

“I am excited to share that I have joined Google Cloud to lead infrastructure silicon design,” Uri Frank wrote in a blog post. “Google has designed and built some of the world’s largest and most efficient computing systems. For a long time, custom chips have been an important part of this strategy. I look forward to growing a team here in Israel while accelerating Google Cloud’s innovations in compute infrastructure. Want to join me? If you are a world class SOC designer, open roles will be posted to careers.google.com soon.”