Google is using AI to design its next generation of AI chips more quickly than humans can

Source: The Verge, added 10th Jun 2021

Google is using machine learning to help design its next generation of machine learning chips. The algorithm’s designs are “comparable or superior” to those created by humans, say Google’s engineers, but can be generated much, much faster. According to the tech giant, work that takes months for humans can be accomplished by AI in under six hours.

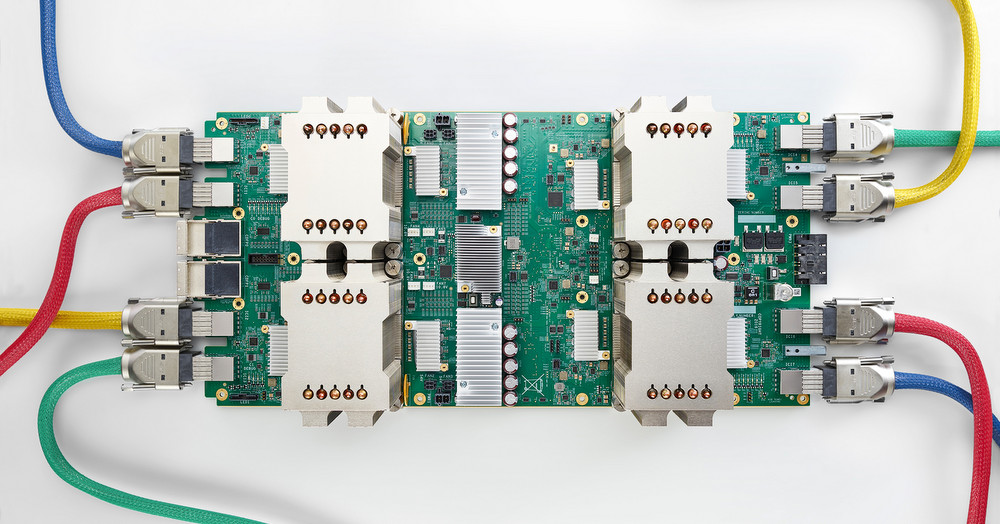

Google has been working on how to use machine learning to create chips for years, but this recent effort — described this week in a paper in the journal Nature — seems to be the first time its research has been applied to a commercial product: an upcoming version of Google’s own TPU (tensor processing unit) chips, which are optimized for AI computation.

“Our method has been used in production to design the next generation of Google TPU,” write the authors of the paper, led by Google’s head of ML for Systems, Azalia Mirhoseini.

AI, in other words, is helping accelerate the future of AI development.

In the paper, Google’s engineers note that this work has “major implications” for the chip industry. It should allow companies to more quickly explore the possible architecture space for upcoming designs and more easily customize chips for specific workloads.

An editorial in Nature calls the research an “important achievement,” and notes that such work could help offset the forecasted end of Moore’s Law, an observation Gordon Moore made in 1965 and revised in 1975 to state that the number of transistors on a chip doubles roughly every two years. AI won’t necessarily solve the physical challenges of squeezing ever more transistors onto chips, but it could help find other ways to keep performance improving at the same rate.
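To make the doubling rule concrete: with a two-year doubling period, a transistor count is multiplied by 2^(t/2) after t years, so a decade means five doublings, or a 32-fold increase. The short Python sketch below just does that compounding arithmetic. The function name and starting count are illustrative, and Moore’s Law is an empirical trend rather than an exact formula.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count forward, assuming it doubles every `doubling_period` years."""
    return initial_count * 2 ** (years / doubling_period)


# Ten years at a two-year doubling period is five doublings: a 32x increase.
print(projected_transistors(1_000_000, 10))  # 32000000.0
```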

The specific task that Google’s algorithms tackled is known as “floorplanning.” This usually requires human designers who work with the aid of computer tools to find the optimal layout on a silicon die for a chip’s sub-systems. These components include things like CPUs, GPUs, and memory cores, which are connected together using tens of kilometers of minuscule wiring. Deciding where to place each component on a die affects the eventual speed and efficiency of the chip. And because chips are manufactured in vast numbers and each one runs through enormous numbers of computational cycles, even nanometer-scale changes in placement can end up having huge effects.
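One common shortcut for scoring a layout without fully wiring it up is half-perimeter wirelength (HPWL): for each net (a group of components that must be wired together), take the smallest box enclosing all of them and add half its perimeter. The Python sketch below is a minimal illustration under simplifying assumptions: each component is treated as a single point on the die, and the component names, nets, and coordinates are made up. It shows why placement matters, since the same three blocks need five times as much estimated wire when they are spread apart.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: component name -> (x, y) position on the die.
Placement = Dict[str, Tuple[float, float]]


def hpwl(placement: Placement, nets: List[List[str]]) -> float:
    """Sum of half-perimeter bounding boxes over all nets (a wirelength proxy)."""
    total = 0.0
    for net in nets:
        xs = [placement[c][0] for c in net]
        ys = [placement[c][1] for c in net]
        # Half the perimeter of the smallest box enclosing every component on the net.
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


nets = [["cpu", "mem"], ["cpu", "gpu", "mem"]]
compact = {"cpu": (0, 0), "gpu": (1, 0), "mem": (0, 1)}
spread = {"cpu": (0, 0), "gpu": (5, 0), "mem": (0, 5)}
print(hpwl(compact, nets), hpwl(spread, nets))  # 3.0 15.0
```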

Google’s engineers note that designing floor plans takes “months of intense effort” for humans, but, from a machine learning perspective, there is a familiar way to tackle this problem: as a game.

AI has proven time and time again it can outperform humans at board games like chess and Go, and Google’s engineers note that floorplanning is analogous to such challenges. Instead of a game board, you have a silicon die. Instead of pieces like knights and rooks, you have components like CPUs and GPUs. The task, then, is simply to find each board’s “win conditions.” In chess that might be checkmate; in chip design it’s computational efficiency.
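The board-game framing can be sketched as a toy environment. In the hypothetical Python below, the die is a 4-by-4 grid, each turn places the next component on a free cell, and the final score is the negative HPWL-style wirelength, so the shortest wiring wins. Two stand-in players are included: one that moves at random and a greedy one that picks whichever cell adds the least wire. Neither is reinforcement learning; in Google’s approach a trained policy chooses the moves, and the grid size, component names, nets, and scoring here are all assumptions for illustration.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Cell = Tuple[int, int]


class FloorplanGame:
    """Toy board-game view of floorplanning (hypothetical, not Google's environment).

    The board is a small grid standing in for the silicon die. Each turn places
    the next component on a free cell. When every component is placed the game
    ends, and the score is the negative wirelength, so shorter wiring wins.
    """

    def __init__(self, width: int, height: int, components: List[str], nets: List[List[str]]):
        self.width, self.height = width, height
        self.components = components
        self.nets = nets
        self.placement: Dict[str, Cell] = {}

    def next_component(self) -> Optional[str]:
        # Components are placed in a fixed order, one per turn.
        for comp in self.components:
            if comp not in self.placement:
                return comp
        return None

    def legal_moves(self) -> List[Cell]:
        taken = set(self.placement.values())
        return [(x, y) for x in range(self.width) for y in range(self.height)
                if (x, y) not in taken]

    def play(self, cell: Cell) -> None:
        comp = self.next_component()
        if comp is None or cell not in self.legal_moves():
            raise ValueError(f"illegal move: {cell}")
        self.placement[comp] = cell

    def wirelength(self) -> int:
        # Half-perimeter wirelength, counting only components already placed.
        total = 0
        for net in self.nets:
            pts = [self.placement[c] for c in net if c in self.placement]
            if len(pts) < 2:
                continue
            xs, ys = [p[0] for p in pts], [p[1] for p in pts]
            total += (max(xs) - min(xs)) + (max(ys) - min(ys))
        return total

    def score(self) -> int:
        return -self.wirelength()


def greedy_policy(game: FloorplanGame) -> Cell:
    """Try every legal cell for the next component and keep the one adding the least wire."""
    comp = game.next_component()
    best_cell, best_wl = None, None
    for cell in game.legal_moves():
        game.placement[comp] = cell  # tentatively make the move
        wl = game.wirelength()
        del game.placement[comp]  # undo it
        if best_wl is None or wl < best_wl:
            best_cell, best_wl = cell, wl
    return best_cell


def random_policy(game: FloorplanGame) -> Cell:
    return random.choice(game.legal_moves())


def play_game(policy: Callable[[FloorplanGame], Cell], seed: int = 0) -> int:
    random.seed(seed)
    game = FloorplanGame(
        4, 4,
        components=["cpu", "gpu", "mem", "io"],
        nets=[["cpu", "mem"], ["cpu", "gpu", "mem"], ["gpu", "io"]],
    )
    while game.next_component() is not None:
        game.play(policy(game))
    return game.score()


print("greedy:", play_game(greedy_policy), "random:", play_game(random_policy))
```

On this tiny board the greedy player reaches a wirelength of 4, the minimum possible for these three nets, so no random game can beat it. Greedy, one-move-at-a-time placement is not optimal in general, though: a learned policy can, in principle, account for how early moves constrain later ones.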

Google’s engineers trained a reinforcement learning algorithm on a dataset of 10,000 chip floor plans of varying quality, some of which had been randomly generated. Each design was tagged with a “reward” score reflecting how well it performed across different metrics, like the length of wire required and power usage. The algorithm then used this data to learn to distinguish between good and bad floor plans and to generate its own designs in turn.
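As a rough illustration of what tagging designs with a reward means, the Python sketch below scores each floor plan as a negative weighted sum of its wire length and power, then labels designs above the median reward as “good.” The weights, metric values, design names, and median cutoff are all hypothetical, and the sketch covers only this labeling step, not the reinforcement learning Google’s algorithm performed on top of such data.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Tuple

# Hypothetical weights: how heavily each metric counts against a design.
WIRE_WEIGHT = 1.0
POWER_WEIGHT = 0.5


@dataclass
class FloorplanMetrics:
    name: str
    wirelength: float  # total wire needed to connect components (arbitrary units)
    power: float       # estimated power draw (arbitrary units)


def reward(m: FloorplanMetrics) -> float:
    """Higher is better: penalize long wiring and high power (weights are assumptions)."""
    return -(WIRE_WEIGHT * m.wirelength + POWER_WEIGHT * m.power)


def label_dataset(designs: List[FloorplanMetrics]) -> List[Tuple[str, float, bool]]:
    """Tag each design with its reward and a good/bad flag (above the median reward = good)."""
    rewards = [reward(d) for d in designs]
    cutoff = median(rewards)
    return [(d.name, r, r > cutoff) for d, r in zip(designs, rewards)]


designs = [
    FloorplanMetrics("human", 120.0, 40.0),
    FloorplanMetrics("random_1", 300.0, 55.0),
    FloorplanMetrics("random_2", 260.0, 50.0),
    FloorplanMetrics("candidate", 110.0, 42.0),
]
for name, r, good in label_dataset(designs):
    print(f"{name:10s} reward={r:7.1f} {'good' if good else 'bad'}")
```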

As we’ve seen when AI systems take on humans at board games, machines don’t necessarily think like humans and often arrive at unexpected solutions to familiar problems. When DeepMind’s AlphaGo played human champion Lee Sedol at Go, this dynamic led to the famous “move 37,” a seemingly illogical move by the AI that nevertheless led to victory.

Nothing quite so dramatic happened with Google’s chip-designing algorithm, but its floor plans nevertheless look quite different to those created by a human. Instead of neat rows of components laid out on the die, sub-systems look like they’ve almost been scattered across the silicon at random. An illustration from Nature shows the difference, with the human design on the left and machine learning design on the right. You can also see the general difference in the image below from Google’s paper (orderly humans on the left; jumbled AI on the right), though the layout has been blurred as it’s confidential:

This paper is noteworthy, particularly because its research is now being used commercially by Google. But it’s far from the only aspect of AI-assisted chip design. Google itself has explored using AI in other parts of the process like “architecture exploration,” and rivals like Nvidia are looking into other methods to speed up the workflow. The virtuous cycle of AI designing chips for AI looks like it’s only just getting started.
