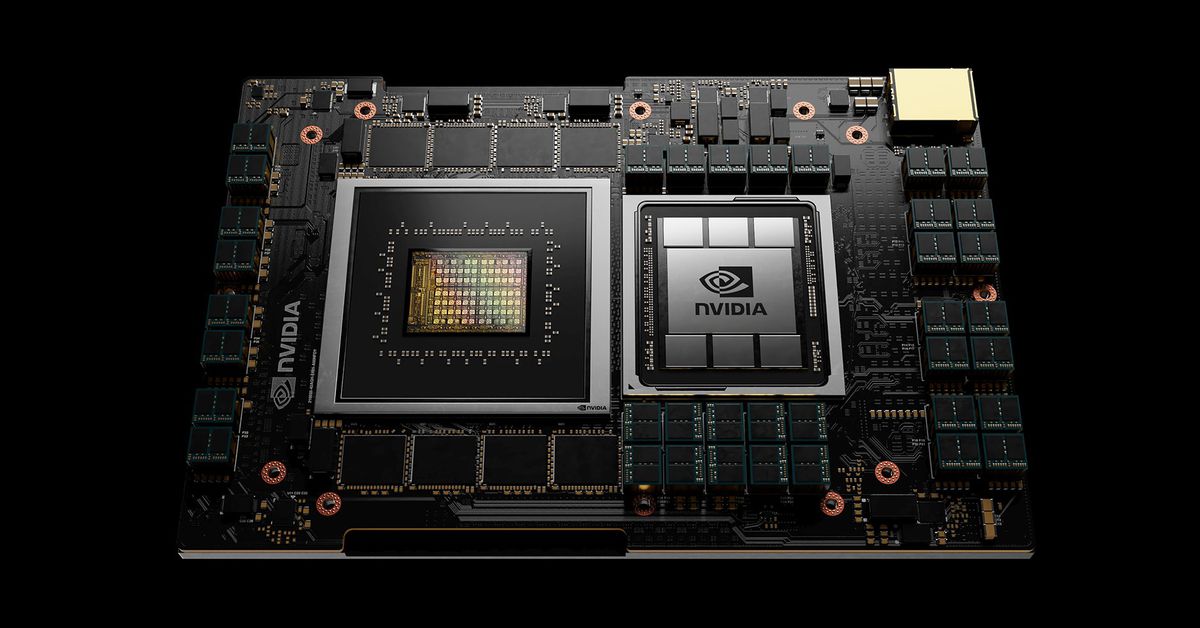

Nvidia is building new Arm CPUs again: Nvidia Grace, for the data center

Source: The Verge, added 12th Apr 2021

We’ve barely heard a peep out of Nvidia on the CPU front for years, after the lackluster arrival of its Project Denver CPU and its associated Tegra K1 mobile processors in 2014. But now, the company’s getting back into CPUs in a big way with the new Nvidia Grace, an Arm-based processing chip specifically designed for AI data centers.

It’s a good time for Nvidia to be flexing its Arm: it’s currently trying to buy Arm itself for $40 billion, pitching it specifically as an attempt “to create the world’s premier computing company for the age of AI,” and this chip might be the first proof point. Arm is having a moment in the consumer computing space as well, where Apple’s M1 chips recently upended our concept of laptop performance. It’s also more competition for Intel, of course, whose shares dipped after the Nvidia announcement.

The new Grace is named after computing pioneer Grace Hopper, and it’s coming in 2023 to bring “10x the performance of today’s fastest servers on the most complex AI and high performance computing workloads,” according to Nvidia. That will make it attractive to research organizations building supercomputers, of course: the Swiss National Supercomputing Centre (CSCS) and Los Alamos National Laboratory have already signed up to build supercomputers with it, also in 2023.

A Grace Next is already on the roadmap for 2025, too. Here’s a slide from Nvidia’s GTC 2021 presentation where it announced the news:

I’d recommend reading what our friends at AnandTech have to say about where Grace might fit into the data center market and Nvidia’s ambitions. It’s worth noting that Nvidia isn’t releasing much in the way of specs just yet, but it does say Grace features a fourth-gen NVLink with a record 900 GB/s interconnect between the CPU and GPU. “Critically, this is greater than the memory bandwidth of the CPU, which means that NVIDIA’s GPUs will have a cache coherent link to the CPU that can access the system memory at full bandwidth, and also allowing the entire system to have a single shared memory address space,” writes AnandTech.

