Nvidia introduced its Arm-based Grace CPU architecture that the company will use to power two new AI supercomputers. Nvidia says its new chips deliver 10X more performance than today’s fastest servers in AI and HPC workloads.

The new Grace CPU architecture comes powered by unspecified “next-generation” Arm Neoverse CPU cores paired with LPDDR5x memory that pumps out 500 GBps of throughput, along with a 900 GBps NVLink connection to an unspecified GPU for the leading-edge devices. Nvidia also revealed a new roadmap (below) that shows a “Grace Next” CPU coming in 2025, along with a new “Ampere Next Next” GPU that will arrive in mid-2024.

Notably, Nvidia named the Grace CPU architecture after Grace Hopper, a famous computer scientist. Nvidia is also rumored to be working on its chiplet-based Hopper GPUs, which would make for an interesting pairing of CPU and GPU codenames that we could see more of in the future.

Nvidia’s pending ARM acquisition, which is still winding its way through global regulatory bodies, has led to plenty of speculation that we could see Nvidia-branded Arm-based CPUs. Nvidia CEO Jensen Huang confirmed that was a distinct possibility, and while the first instantiation of the Grace CPU architecture doesn’t come as a general-purpose design in the socketed form factor we’re accustomed to (instead coming mounted on a board with a GPU), it is clear that Nvidia is serious about deploying its own Arm-based data center CPUs.

Nvidia hasn’t shared core counts or frequency information yet, which isn’t entirely surprising given that the Grace CPUs won’t come to market until early 2023. The company did specify that these are next-generation Arm Neoverse cores. Given what we know about Arm’s current public roadmap (slides below), these are likely the V1 Platform ‘Zeus’ cores, which are optimized for maximum performance at the cost of power and die area.

Image 1 of 3

Image 2 of 3

Image 3 of 3

Chips based on the Zeus cores will come in either 7nm or 5nm flavors and offer a 50% increase in IPC over the current Arm N1 cores. Nvidia says its Grace CPU will have plenty of performance, with a 300+ projected score in the SPECrate_2017_int_base benchmark. That’s impressive for a freshman effort — AMD’s EPYC Milan chips, the current performance leader in the data center, have posted results ranging from 382 to 424, putting Grace more on par with the 64-core AMD Rome chips. Given Nvidia’s ’10X’ performance claims relative to existing servers, it appears the company is referring to primarily GPU-driven workloads.

The Arm V1 platform supports all the latest high-end tech, like PCIe 5.0, DDR5, and either HBM2e or HBM3, along with the CCIX 1.1 interconnect. It appears that, at least for now, Nvidia is utilizing its own NVLink instead of CCIX to connect its CPU and GPU.

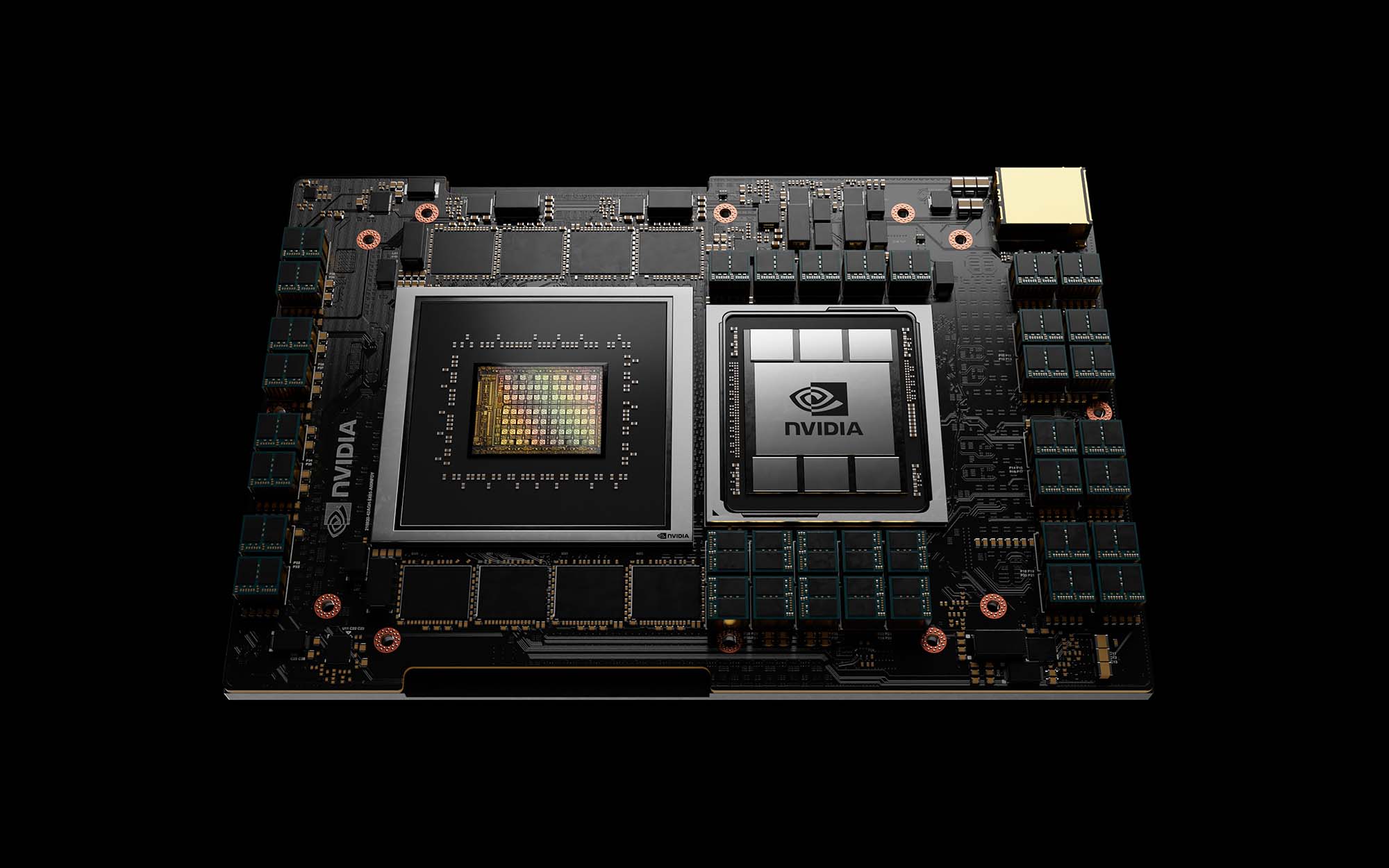

As we can see above, the first versions of the Nvidia Grace CPU will come mounted as a BGA package (meaning it won’t be a socketed part like traditional x86 server chips) and comes flanked by what appear to be eight packages of LPDDR5x memory. Nvidia says that LPDDR5x ECC memory provides twice the bandwidth and 10x better power efficiency over standard DDR4 memory subsystems.

Nvidia’s next-generation NVLink, which it hasn’t shared many details about yet, connects the chip to the adjacent CPU with a 900 GBps transfer rate (14X faster), outstripping the data transfer rates that are traditionally available from a CPU to a GPU by 30X. The company also claims the new design can transfer data between CPUs at twice the rate of standard designs, breaking the shackles of suboptimal data transfer rates between the various compute elements, like CPUs, GPUs, and system memory.

Image 1 of 3

Image 2 of 3

Image 3 of 3

The graphics above outline Nvidia’s primary problem with feeding its GPUs with enough bandwidth in a modern system. The first slide shows the bandwidth limitation of 64 GBps from memory to GPU in an x86 CPU-driven system, with the limitations of PCIe throughput (16 GBps) exacerbating the low throughput and ultimately limiting how much system memory the GPU can utilize fully. The second slide shows throughput with the Grace CPUs: With four NVLinks, throughput is boosted to 500 GBps, while memory-to-GPU throughput increases 30X to 2,000 GBps.

The NVLink implementation also provides cache coherency, which brings the system and GPU memory (LPDDR5x and HBM) under the same memory address space to simplify programming. Cache coherency also reduces data movement between the CPU and GPU, thus increasing both performance and efficiency. This addition allows Nvidia to offer similar functionality to AMD’s pairing of EPYC CPUs with Radeon Instinct GPUs in the Frontier exascale supercomputer, and also Intel’s combination of the Ponte Vecchio graphics cards with the Sapphire Rapids CPUs in the Aurora supercomputer, another world-leading exascale supercomputer.

Nvidia says this combination of features will reduce the amount of time it takes to train GPT-3, the world’s largest natural language AI model, with 2.8 AI-exaflops Selene, the world’s current fastest AI supercomputer, from fourteen days to two.

Nvidia also revealed a new roadmap that it says will dictate its cadence of updates over the next several years, with GPUs, CPUs (Arm and x86), and DPUs all co-existing and evolving on a steady cadence. Huang said the company would advance each architecture every two years, with a possible “kicker” generation in between, which likely will consist of smaller advances to process technology rather than architectures.

Image 1 of 4

Image 2 of 4

Image 3 of 4

Image 4 of 4

The US Department of Energy’s Los Alamos National Laboratory will build a Grace-powered supercomputer. This system will be built by HPE (the division formerly known as Cray) and will come online in 2023, but the DOE hasn’t shared many details about the new system.

The Grace CPU will also power what Nvidia touts as the world’s most powerful AI-capable supercomputer, the Alps system that will be deployed at the Swiss National Computing Center (CSCS). Alps will primarily serve European scientists and researchers when it comes online in 2023 for workloads like climate, molecular dynamics, computational fluid dynamics, and the like.

Given Nvidia’s interest in purchasing Arm, it’s natural to expect the company to begin broadening its relationships with existing Arm customers. To that effect, Nvidia will also bring support for its GPUs to Amazon Web Service’s powerful Graviton 2 Arm chips, which is a key addition as AWS adoption of the Arm architecture has led to broader uptake for cloud workloads.