Intel’s graphics guru Raja Koduri on Wednesday once again teased his Twitter followers with an animated image of how the company’s Ponte Vecchio compute GPU will be made. He posted the teaser just a day after it was revealed that the Leibniz Supercomputing Centre will use Intel’s upcoming Sapphire Rapids CPUs as well as Ponte Vecchio compute GPUs based on the Xe-HPC architecture starting in 2022.

Some Ponte Vecchio Eye Candy pic.twitter.com/fCG2rZrozI (May 5, 2021)

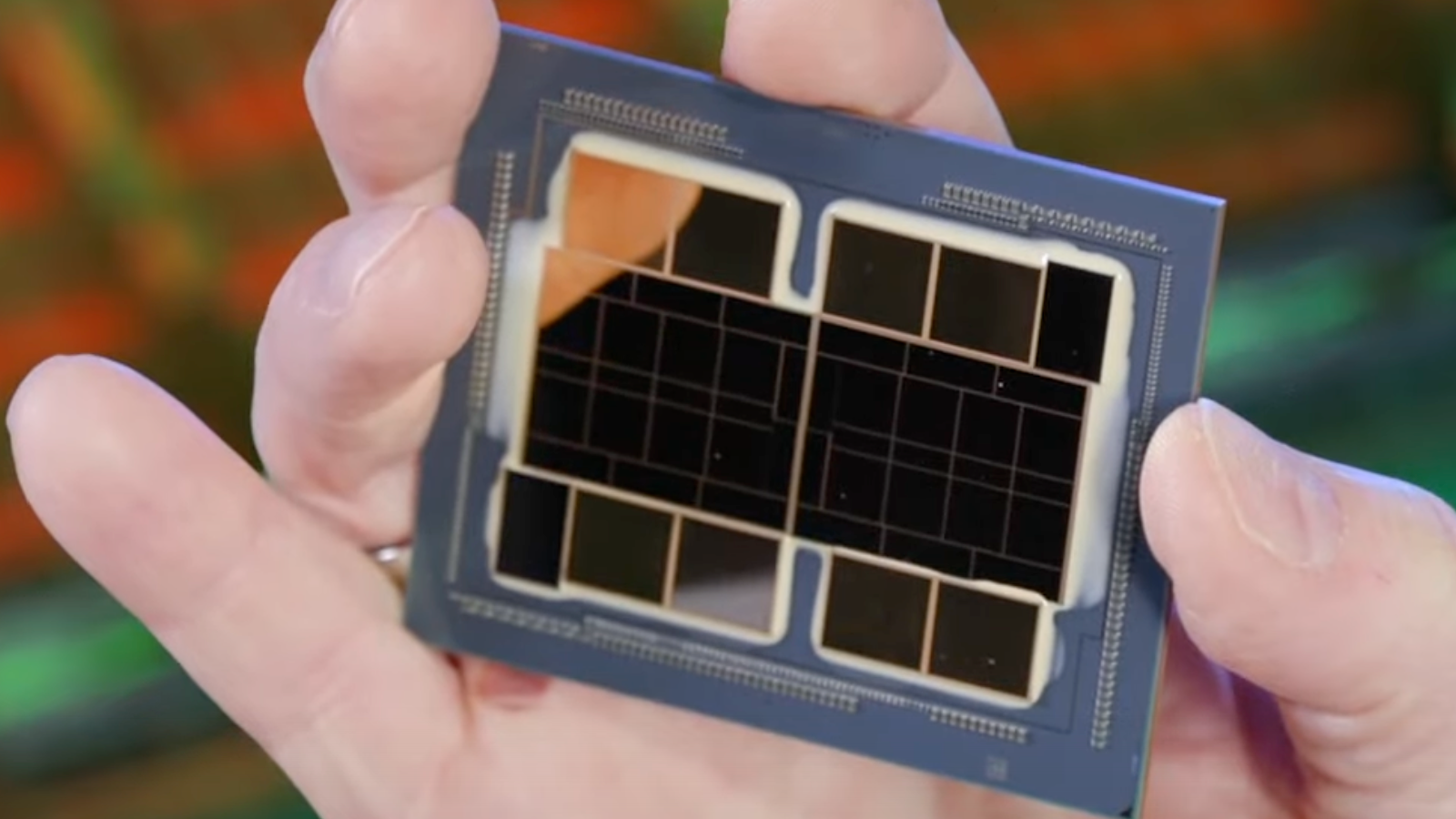

Intel’s Ponte Vecchio will be one of the most complex chips the industry has ever made when it starts shipping in 2022. The compute GPU packs more than 100 billion transistors across 47 different tiles and promises PetaFLOPS-class AI performance. Building a GPU of such complexity is an extreme challenge, so it is understandable that Koduri and other Intel engineers are proud of it.

Hardwareluxx reports that the Leibniz Supercomputing Centre in Munich, Germany, will adopt Intel’s 4th Generation Xeon Scalable ‘Sapphire Rapids’ CPUs and Ponte Vecchio compute GPUs for its phase two SuperMUC-NG supercomputer, due to be delivered in 2022. The system is also expected to use 1PB of Distributed Asynchronous Object Storage (DAOS) based on Optane DC SSDs and Optane DC Persistent Memory.

There is no word about performance expected from the new system, but the phase one SuperMUC-NG uses 12,960 Intel Xeon Platinum 8174 processors with 24 cores each. The system offers Linpack (Rmax) performance of 19,476.6 TFLOPS and Linpack Theoretical Peak (Rpeak) performance of 26,873.9 TFLOPS. At present, the supercomputer does not use any GPU accelerators for compute, but engineers from the Leibniz Supercomputing Centre reportedly use some Nvidia Tesla boards for testing their programs.
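For a rough sense of scale, the phase one figures quoted above can be combined in a few lines of Python. This is only a back-of-the-envelope sketch: the variable names are ours, and it assumes that all 12,960 processors contribute to the quoted Linpack numbers.

```python
# Figures quoted in the article for the phase one SuperMUC-NG.
processors = 12_960
cores_per_processor = 24
rmax_tflops = 19_476.6   # measured Linpack performance (Rmax)
rpeak_tflops = 26_873.9  # theoretical peak performance (Rpeak)

# Total CPU cores, assuming every processor is counted.
total_cores = processors * cores_per_processor

# Linpack efficiency: the share of theoretical peak achieved in the benchmark.
efficiency = rmax_tflops / rpeak_tflops

# Theoretical peak per core, converted from TFLOPS to GFLOPS.
rpeak_per_core_gflops = rpeak_tflops * 1000 / total_cores

print(f"Total cores: {total_cores:,}")
print(f"Linpack efficiency: {efficiency:.1%}")
print(f"Peak per core: {rpeak_per_core_gflops:.1f} GFLOPS")
```

The efficiency ratio is a useful baseline to keep in mind once numbers for the GPU-accelerated phase two system are published.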

The Ponte Vecchio GPU looks very promising, but so far it has only one other official design win: Argonne National Laboratory’s Aurora supercomputer. That system is set to be one of the industry’s first supercomputers to deliver over 1 ExaFLOPS of FP64 performance and will be used to tackle some of the world’s most complex compute challenges.