Cerebras Systems today announced what it bills as the first brain-scale AI solution – a single system that can support 120-trillion-parameter AI models, exceeding the roughly 100 trillion synapses in the human brain. In contrast, clusters of GPUs, the most commonly used devices for AI workloads, typically top out at 1 trillion parameters. Cerebras can accomplish this industry first with a single 850,000-core system, but it can also spread workloads across up to 192 CS-2 systems with a combined 163 million AI-optimized cores to unlock even more performance.
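As a quick sanity check on the headline figures, the short sketch below multiplies out the numbers quoted above. The variable names are illustrative only, and the inputs are simply the article's figures rather than anything measured.

```python
# Back-of-envelope check of the headline numbers quoted in the article.
# All names here are illustrative; the values come straight from the text.
CORES_PER_CS2 = 850_000                 # AI-optimized cores in one CS-2
MAX_CS2_SYSTEMS = 192                   # largest cluster Cerebras describes
MAX_PARAMETERS = 120 * 10**12           # 120 trillion parameters
HUMAN_BRAIN_SYNAPSES = 100 * 10**12     # ~100 trillion synapses
GPU_CLUSTER_PARAMETERS = 1 * 10**12     # ~1 trillion, typical GPU-cluster ceiling

# Aggregate core count across a full 192-system cluster.
total_cores = CORES_PER_CS2 * MAX_CS2_SYSTEMS

# How the claimed model size compares with the two reference points.
vs_brain = MAX_PARAMETERS / HUMAN_BRAIN_SYNAPSES
vs_gpu_cluster = MAX_PARAMETERS / GPU_CLUSTER_PARAMETERS

print(f"Total cores across {MAX_CS2_SYSTEMS} systems: {total_cores:,}")
print(f"Parameters vs. brain synapses: {vs_brain:.1f}x")
print(f"Parameters vs. GPU-cluster ceiling: {vs_gpu_cluster:.0f}x")
```

The product works out to 163.2 million cores, and the 120x gap over a 1-trillion-parameter GPU cluster is in the same ballpark as the "100x increase in parameter capacity" that Argonne cites.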

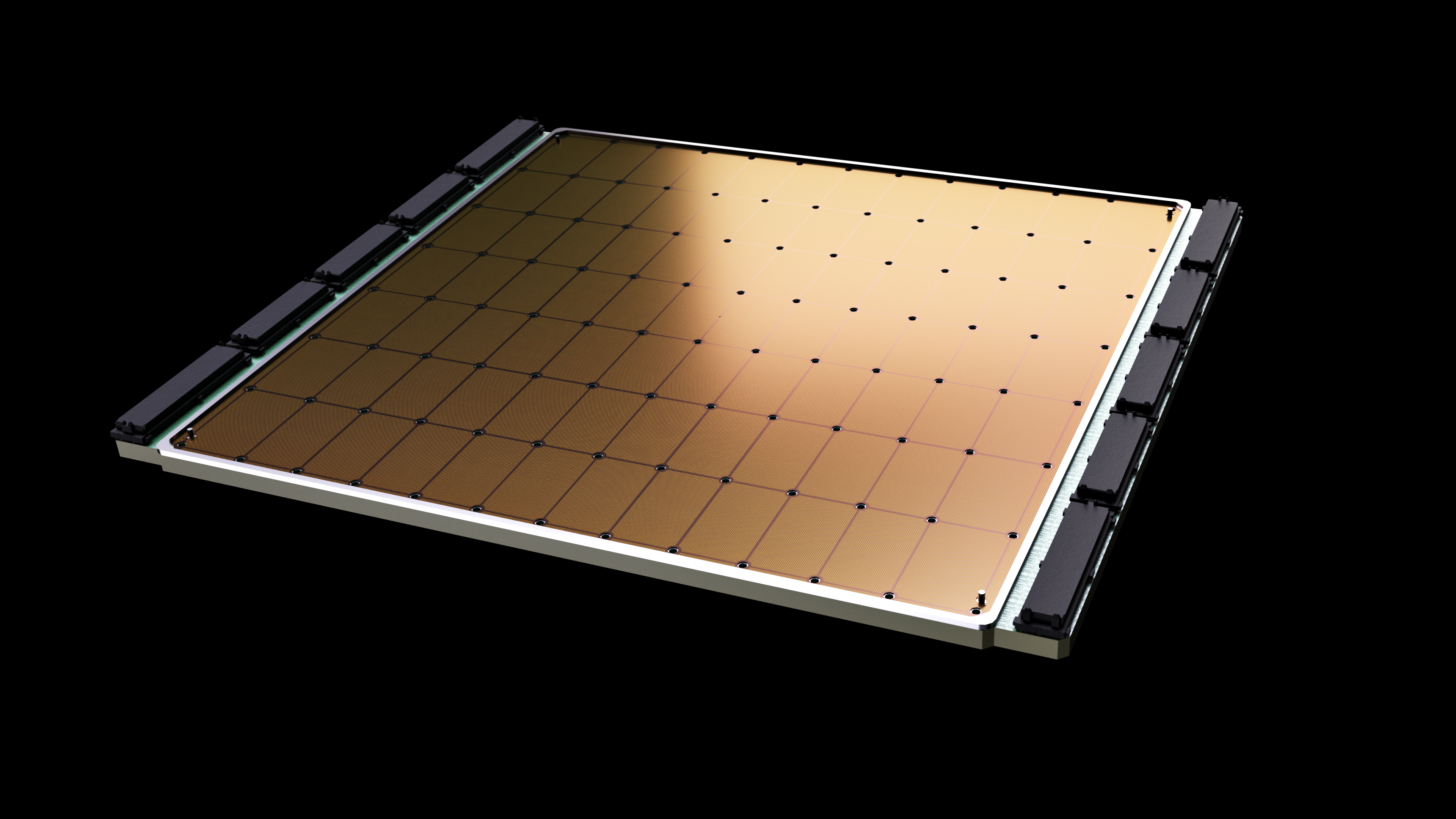

As the fastest AI processor known to humankind, the Cerebras CS-2 is undoubtedly one of the most unusual semiconductor devices on the planet. With 46,225 mm² of silicon, 2.6 trillion transistors, and 850,000 AI-optimized cores all packed onto a single wafer-sized 7nm processor, its compute capability is in a league of its own.

However, each massive chip comes embedded in a single CS-2 system, and although the chip has plenty of memory, its capacity can still limit the size of AI models. The chip has 40 GB of on-chip SRAM, but adding a new external cabinet with additional memory allows the company to run larger, brain-scale AI models.

Scalability is also a challenge. With 20 petabytes per second of memory bandwidth and 220 petabits per second of aggregate fabric bandwidth on each chip, communication between multiple chips is difficult using traditional techniques that share the full workload between processors. The system’s extreme computational horsepower also makes it hard to scale performance across multiple systems, especially in light of the chip’s 15kW of power consumption. That power draw requires custom cooling and power delivery, making it nearly impossible to cram more wafer-sized chips into a single system.

Cerebras’ multi-node solution takes a different approach: It stores model parameters off-chip in a MemoryX cabinet and streams them to the chip, while the computation itself stays on-chip. This not only allows a single system to compute larger AI models than was previously possible, but it also combats the latency and memory bandwidth issues that often restrict scalability with groups of ‘smaller’ processors, like GPUs. In addition, Cerebras says this technique allows the system to scale performance near-linearly across up to 192 CS-2 systems.

The company uses its SwarmX Fabric to scale workloads across nodes. This AI-optimized interconnect uses Ethernet at the PHY level but runs a customized protocol to transfer compressed and reduced data across the fabric. Each SwarmX switch supports up to 32 Cerebras CS-2 systems and serves up nearly a terabit of bandwidth per node.
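To put those switch figures in context, here is a rough sketch of how many SwarmX switches a maximum-size cluster would need. It assumes a flat, single-tier arrangement in which every CS-2 attaches to exactly one switch, and it treats "nearly a terabit" as an upper bound of 1 Tb/s per node; the article describes neither the topology nor the exact rate, so both are assumptions, and the function name is hypothetical.

```python
import math

# Figures quoted in the article.
SYSTEMS_PER_SWITCH = 32     # CS-2 systems supported by one SwarmX switch
MAX_CS2_SYSTEMS = 192       # largest cluster Cerebras describes

# Assumption: "nearly a terabit" per node, treated as a 1 Tb/s upper bound.
PER_NODE_TBPS_UPPER_BOUND = 1.0


def switches_needed(systems: int, per_switch: int = SYSTEMS_PER_SWITCH) -> int:
    """Minimum switch count if each system connects to exactly one switch.

    Assumes a flat, single-tier layout, which the article does not confirm.
    """
    return math.ceil(systems / per_switch)


switches = switches_needed(MAX_CS2_SYSTEMS)
aggregate_tbps_upper_bound = MAX_CS2_SYSTEMS * PER_NODE_TBPS_UPPER_BOUND

print(f"Switches for {MAX_CS2_SYSTEMS} systems: {switches}")
print(f"Aggregate node bandwidth (upper bound): {aggregate_tbps_upper_bound:.0f} Tb/s")
```

Under these assumptions a full 192-system deployment needs at least six switches; any redundancy or extra switching tiers would raise that count.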

The switches connect the systems to the MemoryX box, which comes with anywhere from 4TB to 2.4PB of memory capacity. The memory comes as a mix of flash and DRAM, but the company hasn’t shared the flash-to-DRAM ratio. This single box can store up to 120 trillion weights and also has a ‘few’ x86 processors to run the software and data plane for the system.
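Dividing the largest MemoryX capacity by the maximum weight count gives a rough storage budget per weight. The sketch below assumes decimal units (1 PB = 10^15 bytes) and assumes the 120-trillion-weight figure corresponds to the 2.4PB configuration. The article does not say how those bytes are split between the weights themselves and any accompanying training state, so the result is only an average, and the extrapolation to the 4TB configuration is hypothetical.

```python
# Rough storage budget per weight in a fully configured MemoryX box.
# Assumptions: decimal units, and 120 trillion weights maps to the 2.4 PB option.
TERABYTE = 10**12
PETABYTE = 10**15

MAX_CAPACITY_BYTES = 24 * PETABYTE // 10   # 2.4 PB, kept as an exact integer
MIN_CAPACITY_BYTES = 4 * TERABYTE          # 4 TB entry configuration
MAX_WEIGHTS = 120 * 10**12                 # 120 trillion weights

# Average bytes available per stored weight at maximum capacity.
bytes_per_weight = MAX_CAPACITY_BYTES / MAX_WEIGHTS

# Hypothetical extrapolation: weights the 4 TB box could hold at the same ratio.
min_config_weights = MIN_CAPACITY_BYTES / bytes_per_weight

print(f"Average bytes per weight: {bytes_per_weight:.0f}")
print(f"Weights in a 4 TB box at that ratio: {min_config_weights:.2e}")
```

That works out to about 20 bytes per weight, which leaves room beyond a single 16-bit or 32-bit value per weight, though what occupies the remainder is not something the article specifies.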

Naturally, only a few hundred customers in the world could use such systems, but Cerebras aims to simplify running AI models that easily eclipse the size of any existing model. Those customers probably include military and intelligence agencies that could use these systems for any number of purposes, including nuclear modeling, but Cerebras can’t name several of its customers (for obvious reasons). We do know that the company collaborates with the Argonne National Laboratory, which commented on the new systems:

“The last several years have shown us that, for NLP models, insights scale directly with parameters – the more parameters, the better the results,” says Rick Stevens, Associate Director, Argonne National Laboratory. “Cerebras’ inventions, which will provide a 100x increase in parameter capacity, may have the potential to transform the industry. For the first time we will be able to explore brain-sized models, opening up vast new avenues of research and insight.”