by Jordi Bercial, yesterday at 20:58

As reported by the Polish portal PurePC, suspicions about a secret pact under which laptop manufacturers would pair AMD processors only with mid-range and low-end NVIDIA GPUs appear to be true. Although there had been speculation and rumors before, it now seems that there was an agreement between Intel and NVIDIA that blocked the development of laptops with AMD Renoir processors and GPUs above the RTX 2070.

Many will remember that the reason given at the time was the CPU's limited number of PCI Express lanes: a maximum of 8 PCI Express 3.0 lanes was considered too few to connect an RTX 2070 or an RTX 2080. That explanation has turned out to be a cover for the real reason, which may come with legal consequences.

This information comes from one of the OEMs, speaking anonymously, who acknowledges that the situation was entirely artificial, probably in exchange for large sums of money. High-performance laptops with AMD Ryzen processors and high-end NVIDIA GPUs have been sought, unsuccessfully, by enthusiasts all over the world.

Avid technology and electronics enthusiast. I messed around with computer components almost since I learned to ride. I started working at Geeknetic after winning a contest on their forum for writing hardware articles. Drift, mechanics and photography lover. Don’t be shy and leave a comment on my articles if you have any questions.

Sponsored Content

Chuwi’s new budget notebook features a Samsung-made 3200 x 1800 pixel display and low-end hardware to keep prices down. It is well suited to productivity and multimedia at a low price.

by Editorial team, published 19 January 2021 at 12:29 in the Portable channel

Chuwi

Chuwi recently launched a new low-cost notebook, called HeroBook Pro+ and equipped with a 3K resolution screen. The new laptop uses a 13.3-inch high-definition Samsung panel with 3200 x 1800 pixels and a density of 227 ppi.

The Chuwi HeroBook Pro+ also uses an Intel Celeron J3455 processor, chosen to keep costs down, combined with 8 GB of RAM and 128 GB of storage (other configurations are possible). The price is 269 dollars, with sales expected, according to the company, on the “main platforms”.

Chuwi borrows a nomenclature dear to Apple to imply that the display of the HeroBook Pro+ has a definition comparable to what the human eye can resolve. Notebooks with this feature usually cost around 500 dollars, while Chuwi offers it at approximately 250 dollars. Furthermore, the Chinese brand has chosen a Samsung display capable of high color saturation and good visual rendering, so the product can be used effectively both for playing audiovisual content and for editing photographs.

The Intel Celeron J3455 processor can run at up to 2.3 GHz and can meet productivity and multimedia needs. It integrates the Intel HD Graphics 500 GPU, with support for hardware 4K decoding, including for online content. The 8 GB of system memory is LPDDR4, while storage is eMMC flash memory that can be expanded with a memory card. All of this comes in a very light body that weighs 1.16 kg, thanks to the use of resistant and long-lasting polycarbonate.

All the information about the Chuwi HeroBook Pro+ can be found on the official website.

Acer is announcing five new laptops aimed at schools today, consisting of four Chromebooks and one Windows convertible. Two of the Chromebooks are Intel-based convertibles, with screens that spin around to turn them into tablets, while the other two feature a more traditional design and are powered by Arm-based processors. All five laptops are designed to be durable, with spill-resistant keyboards and components tested to MIL-STD 810H military durability standards.

The two convertible Chromebooks are the Acer Spin 512 and Spin 511. They’re powered by Intel processors (specifically the N4500 and N5100). The Spin 511 has an 11.6-inch HD 16:9 display, while the Spin 512 has a 12-inch HD+ 3:2 display. Both come with up to 64GB of storage and 8GB of RAM, while battery life is rated up to 10 hours.

Both the Spin 512 and Spin 511 have antimicrobial scratch-resistant displays, but the Spin 512 also features a similar antimicrobial coating on its keyboard and touchpad. The Chromebooks will be available in Europe in March and North America in April. The Spin 512 starts at $429.99 (€399), while the Spin 511 is slightly cheaper at $399.99 (€369).

Next up are the 11-inch Arm-based Chromebook 511 and Chromebook 311, which also have durable designs. The 511 is powered by a Qualcomm Snapdragon 7c processor, features 4G LTE connectivity and can go for up to 20 hours on a charge. Meanwhile, the 311 has a Mediatek MT8183 processor and also runs for up to 20 hours on a charge. The 311 launches this month in North America and is priced starting at $299.99, while the 511 is coming in April starting at $399.99. They’ll launch in Europe in March for €269 and €399 respectively.

Finally, there’s a $329.99 (€409) Windows laptop, the TravelMate Spin B3. It also includes a durable keyboard that can flip around to turn the laptop into a tablet, and is powered by up to an Intel Pentium Silver processor with as much as 12 hours of battery life. The Spin B3 comes with an antimicrobial display as standard, and the option of having a similar coating on its keyboard and touchpad. The laptop will launch in North America in April, and Europe at some point in Q2.

You’ve come to the right place if you’re wondering what is G-Sync. In short, Nvidia G-Sync is a type of display technology used in certain PC monitors, laptops and TVs to fight screen tearing, stuttering and juddering, especially during fast-paced games or video. G-Sync only works when the display is connected to a system using a compatible Nvidia graphics card (including third-party branded ones). So if you don’t yet have a compatible card, be sure to check out our graphics card buying guide, as well as our in-depth comparison of the features of AMD and Nvidia GPUs. And if you’re after a portable G-Sync experience, check out our Gaming Laptop Buying Guide.

Nvidia introduced G-Sync in 2013, and its biggest rival is AMD FreeSync. But the answer to “what is G-Sync” is getting increasingly complex. There are now three tiers of G-Sync: G-Sync, G-Sync Ultimate and G-Sync Compatible.

Screen tearing is an unwelcome effect on the image (see photo above). It’s the result of the game’s framerate (the rate at which image frames display) not matching the monitor’s refresh rate (the frequency at which a display’s image redraws). G-Sync displays have a variable refresh rate (also known as VRR or a dynamic refresh rate) and can sync their minimum and maximum refresh rates with the framerate of the system’s Nvidia graphics card. That refresh rate range can go as high as the monitor’s maximum refresh rate. This way, you see images right when they’re rendered, while also fighting input lag or delays between when you move your mouse (for recommendations, see our Best Gaming Mouse article) and when the cursor actually moves.
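To make the timing idea concrete, here is a minimal sketch in Python. It is a simplified model, not Nvidia's implementation: it assumes a fixed-refresh display with V-Sync, where a finished frame waits for the next refresh tick, and a variable-refresh display that shows a frame as soon as it is ready, as long as that does not exceed the panel's maximum refresh rate. The function names and the sample frame times are made up for illustration, and behavior below the panel's minimum refresh rate is not modeled.

```python
import math

def fixed_refresh_display_times(frame_ready_ms, refresh_hz):
    """Fixed refresh with V-Sync: each frame waits for the next refresh tick."""
    interval = 1000.0 / refresh_hz
    return [math.ceil(t / interval) * interval for t in frame_ready_ms]

def variable_refresh_display_times(frame_ready_ms, max_hz):
    """Variable refresh: show each frame when it is ready, but never
    faster than the panel's maximum refresh rate allows."""
    min_interval = 1000.0 / max_hz
    shown = []
    for t in frame_ready_ms:
        if shown:
            t = max(t, shown[-1] + min_interval)
        shown.append(t)
    return shown

# Hypothetical times (in ms) at which the GPU finishes each frame.
frame_ready = [12.0, 31.0, 47.0, 70.0]

fixed = fixed_refresh_display_times(frame_ready, refresh_hz=60)
variable = variable_refresh_display_times(frame_ready, max_hz=144)

for ready, f, v in zip(frame_ready, fixed, variable):
    print(f"ready {ready:5.1f} ms | fixed 60 Hz waits {f - ready:5.2f} ms | VRR waits {v - ready:5.2f} ms")
```

In this model the fixed 60 Hz display delays every frame by a different amount, which is felt as judder and input lag, while the variable-refresh display adds no wait because the frames arrive more slowly than its maximum refresh rate.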

FreeSync is AMD’s answer to G-Sync, and both use VESA’s Adaptive-Sync protocol. Just like you need an Nvidia graphics card to use G-Sync, you need an AMD graphics card to use FreeSync.

There are some key differences. One of the standouts is that FreeSync works over HDMI and DisplayPort (which also works over USB Type-C), but G-Sync only works with DisplayPort, unless you’re using a G-Sync Compatible TV (more on that below). However, Nvidia has said that it’s working on changing this. For more on the two ports and which is best for gaming, see our DisplayPort vs. HDMI analysis.

In terms of performance, our testing has shown minute differences between the two. For an in-depth look at the variances in performance, check out our G-Sync vs. FreeSync article and see the results.

While both G-Sync and FreeSync are based on Adaptive-Sync, G-Sync and G-Sync Ultimate also require usage of a proprietary Nvidia chip. Monitor vendors are required to buy this in place of the scaler they’d typically buy if they want their display certified for G-Sync or G-Sync Ultimate. FreeSync, on the other hand, is an open standard, and FreeSync monitors are generally cheaper than G-Sync or G-Sync Ultimate ones. However, G-Sync Compatible monitors don’t require this chip and many FreeSync monitors are also G-Sync Compatible.

G-Sync comes in three different flavors. G-Sync is the standard, G-Sync Ultimate targets those with HDR content and G-Sync Compatible is the lowest-priced form, since it doesn’t require display makers to incorporate/buy Nvidia’s hardware. Many G-Sync Compatible displays are also FreeSync-certified.

| G-Sync | G-Sync Ultimate | G-Sync Compatible |
|---|---|---|
| Validated for artifact-free performance | Validated for artifact-free performance | Validated for artifact-free performance |
| Certified with over 300 tests | Certified with over 300 tests | |
| | Certified for 1,000 nits brightness with HDR | |

Here you can find a list of every G-Sync, G-Sync Ultimate and G-Sync Compatible monitor.

A monitor’s G-Sync also works with HDR content, but things will look better if that monitor has G-Sync Ultimate, formerly called G-Sync HDR (for HDR recommendations, check out our article on how to pick the best HDR monitor).

Unlike regular G-Sync, Nvidia certifies G-Sync Ultimate displays for ultra-low latency, multi-zone backlights, DCI-P3 color gamut coverage, 1,000 nits max brightness with HDR video or games and to run at its highest refresh rate at its max resolution — all thanks to “advanced” Nvidia G-Sync processors. Keep in mind these displays are typically BFGD (big format gaming displays) and, therefore, on the pricier end.

To use a G-Sync monitor with a desktop PC, you need:

To use a G-Sync monitor with a laptop, you need:

To use a G-Sync Ultimate monitor with a desktop PC, you need:

To use a G-Sync Ultimate monitor with a laptop, you need:

In 2019, Nvidia started testing and approving specific displays, including ones with other types of Adaptive-Sync technology, like FreeSync, to run G-Sync. These monitors are called G-Sync Compatible. Confirmed by our own testing, G-Sync Compatible displays can successfully run G-Sync with the proper driver and a few caveats even though they don’t have the same chips as a G-Sync or G-Sync Ultimate display.

Some things Nvidia confirms you can’t do with G-Sync Compatible displays compared to regular G-Sync displays are ultra low motion blur, overclocking and variable overdrive.

You can find the full list of G-Sync Compatible monitors at the bottom of Nvidia’s webpage.

We’ve also found that numerous FreeSync monitors can run G-Sync Compatibility even though they’re not certified to do so. To learn how to run G-Sync Compatibility, see our step-by-step instructions for how to run G-Sync on a FreeSync monitor, which includes details on the small number of limitations you’ll face. And for what Nvidia and monitor makers think about running G-Sync on non-certified monitors, check out our article Should You Care if Your Monitor Is Certified G-Sync Compatible?

As of 2021, there are numerous LG-branded G-Sync Compatible OLED TVs. They work via connection over HDMI to a desktop or laptop with an Nvidia RTX or GTX 16-series graphics card. You also need to follow the instructions for downloading the proper firmware. Nvidia said it’s working on getting more TVs that work with G-Sync Compatibility over HDMI in the future.

Here’s every G-Sync Compatible TV announced as of this writing:

This article is part of the Tom’s Hardware Glossary.

A Capitol rioter may have stolen Speaker Nancy Pelosi’s laptop with plans to sell it to the Russian government, according to an FBI affidavit filed on Sunday. The affidavit charges Riley June Williams with unlawful entry and impeding government functions, based on an extensive video record placing her at the scene of the Capitol riot. But the affidavit also uncovers an apparent plan to steal Speaker Pelosi’s laptop and convey it to a contact in Russia, who would then make contact with the country’s government.

Williams was identified by UK media over the weekend, and officers confirmed the identification with her driver’s license photo (on file with the Pennsylvania Department of Transportation) and in an in-person interview with her parents. Notably, public videos show Williams directing rioters to the general location of Pelosi’s office, consistent with the idea that she intended to ransack the office.

According to the affidavit, a former associate of Williams told police she had planned to steal Speaker Pelosi’s laptop with the intention of selling it to the Russian government.

“[The witness] stated that Williams intended to send the computer device to a friend in Russia, who then planned to sell the device to SVR, Russia’s foreign intelligence service,” the affidavit reads. “According to [the witness], the transfer of the computer device to Russia fell through for unknown reasons and Williams still has the computer device or destroyed it. This matter remains under investigation.”

Reuters has previously reported that a laptop was stolen from Pelosi’s office. According to an aide, the laptop was stationed in a conference room and “was used for presentations.”

Device theft has been a significant concern for government IT workers in the wake of the Capitol riot, which broke long-standing security procedures around congressional offices. The idea of devices being stolen or tampered with was viewed as a “nightmare scenario” in the wake of the attack.

“I don’t think I’d sleep well until the networks were rebuilt from scratch,” said one former House IT official on Twitter, “and every computer wiped and the internals visually inspected before being put back into service.”

Williams remains at large, despite the ongoing investigation and public identification. She has discontinued her phone number and deleted social media accounts on Facebook, Instagram, Twitter, Reddit, Telegram and Parler. After returning from the raid, she packed a bag and told her mother she would be gone “for a couple weeks,” the affidavit reads. “Williams did not provide her mother any information about her intended destination.”

Idriz Velghe, 12 January 2021, 19:24

Zen 3 for laptops

AMD has unveiled new laptop processors featuring the Zen 3 architecture at the CES trade show. In addition to the U models for thin notebooks, the company also unveiled the new HX SKUs for gaming laptops. New video cards for both laptops and desktops were discussed as well, and there was a hint about the next generation of Epyc server chips.

The Ryzen 7 5800U is the top model of the power-efficient U series. With 8 cores and 16 threads, it claims the title of the only x86 octa-core in the ‘ultrathin’ class. According to AMD CEO Lisa Su, the new APU performs 7 to 44% better than the Intel Core i7-1185G7, depending on the workload. Besides better performance, the U line is also meant to deliver longer battery life: up to 21 hours is claimed for video playback.

In addition, two HX processors have been unveiled, the Ryzen 9 5900HX and 5980HX. They feature 8 cores and 16 threads, and a total of 20 MB of L2 and L3 cache. The difference is in the clock speed: the 5900HX boosts up to 4.6 GHz, while the 5980HX reaches a maximum frequency of 4.8 GHz. Both SKUs have a TDP of ‘45+ W’ according to AMD, as it is possible to overclock them.

During the presentation, the 5900HX was compared to the Core i9-10980HK, the best Intel has to offer in mobile gaming processors. The Ryzen chip performs better in all areas. For example, it scores 15% higher in Cinebench R20’s single-threaded test, and the 5900HX performs 19% better than its Intel counterpart in 3DMark Fire Strike Physics.

The first laptops with Ryzen 5000 CPUs should be available from February. AMD expects around 250 different models to arrive. For the 4000 and 3000 series, this was 150 and 70 respectively.

After the presentation, AMD posted its full lineup of laptop processors on its website. It again shows that the 5000 series consists of both Zen 2 and Zen 3 chips, confirming previous rumors.

| Processor | Cores / Threads | Base Clock | Boost Clock | Cache | TDP | Architecture |
|---|---|---|---|---|---|---|
| AMD Ryzen 9 5980HX | 8C / 16T | 3.3 GHz | 4.8 GHz | 20 MB | 45+ W | Zen 3 |
| AMD Ryzen 9 5980HS | 8C / 16T | 3.0 GHz | 4.8 GHz | 20 MB | 35 W | Zen 3 |
| AMD Ryzen 9 5900HX | 8C / 16T | 3.3 GHz | 4.6 GHz | 20 MB | 45+ W | Zen 3 |
| AMD Ryzen 9 5900HS | 8C / 16T | 3.0 GHz | 4.6 GHz | 20 MB | 35 W | Zen 3 |
| AMD Ryzen 7 5800H | 8C / 16T | 3.2 GHz | 4.4 GHz | 20 MB | 45 W | Zen 3 |
| AMD Ryzen 7 5800HS | 8C / 16T | 2.8 GHz | 4.4 GHz | 20 MB | 35 W | Zen 3 |
| AMD Ryzen 5 5600H | 6C / 12T | 3.3 GHz | 4.2 GHz | 19 MB | 45 W | Zen 3 |
| AMD Ryzen 5 5600HS | 6C / 12T | 3.0 GHz | 4.2 GHz | 19 MB | 35 W | Zen 3 |
| AMD Ryzen 7 5800U | 8C / 16T | 1.9 GHz | 4.4 GHz | 20 MB | 15 W | Zen 3 |
| AMD Ryzen 7 5700U | 8C / 16T | 1.8 GHz | 4.3 GHz | 12 MB | 15 W | Zen 2 |
| AMD Ryzen 5 5600U | 6C / 12T | 2.3 GHz | 4.2 GHz | 19 MB | 15 W | Zen 3 |
| AMD Ryzen 5 5500U | 6C / 12T | 2.1 GHz | 4.0 GHz | 11 MB | 15 W | Zen 2 |
| AMD Ryzen 3 5300U | 4C / 8T | 2.6 GHz | 3.8 GHz | 6 MB | 15 W | Zen 2 |

Idriz Velghe, 23 December 2020, 16:53

Introduction

Dataminer Tum_Apisak has posted a series of Geekbench results to Twitter. They cover various laptop processors from both Intel and AMD. Thanks to these new benchmarks, it is possible to compare the next generations from the two major processor manufacturers.

Two new Intel Tiger Lake SKUs have emerged. These are processors from the presumed H35 series, which will have a maximum of 4 cores and a TDP not exceeding 35 W. Intel is expected to announce this series at the CES show in January. The strongest H-series processors will probably have 8 cores and a TDP of 45 W. It is rumored that Intel is still working hard on these models, so they are expected in the second quarter of next year at the earliest.

In addition to AMD and Intel, Nvidia will also hold an event during CES 2021.

The next generation of laptop chips from the red camp has also been surfacing for some time now, in the form of product pages appearing at retailers or spotted benchmark results. Now that the lower-segment Ryzen 5 5600H has surfaced, it is becoming clearer what the Cezanne lineup looks like. AMD is also expected to announce the frugal Ryzen 5000U and high-end 5000H processors at the CES trade show, which takes place in three weeks’ time.

As both processor series have not yet been officially announced, there is no guarantee that the surfaced specifications are final. These figures are therefore still subject to change. Yet they give an indication of the laptop processor landscape in 2021.

Qualcomm admitted some time ago that Apple’s new ARM-based M1 chip is a clear sign that computers are heading in the right direction. At that time, it was not yet known that Qualcomm was working on something to rival the M1. New reports from the WinFuture website reveal that the chip, so far called Snapdragon SC8280, is to contain more cores than the Snapdragon 8cx for PCs, which was announced in September last year. It seems that the new chip is already being tested in a notebook with a 14-inch display and measures 20 x 17 millimeters. It is also supposed to support cellular connectivity.

The test platform is equipped with a Snapdragon X55 5G modem, but whether the modem is integrated into the chipset or installed separately on the motherboard is something we’ll learn in the future. According to reports, two memory variants are also being tested. One platform has 8 GB of LPDDR5 RAM, while the other supports 32 GB of LPDDR4X RAM (these are the maximum capabilities of this chip). Mac computers with Apple M1 chips are currently limited to 16 GB of memory.

So as you can see, when it comes to numbers, the Snapdragon SC8280 has an undeniable advantage. However, Apple is not standing still when it comes to developing more powerful ARM-based chips. According to the latest reports, Apple’s next proprietary chip will have 12 cores. Given how easily the M1 beats more power-hungry chips while using less power, and how impressive it is on the GPU side, the Qualcomm team should roll up their sleeves and present a competitor without delay.

Source: WinFuture

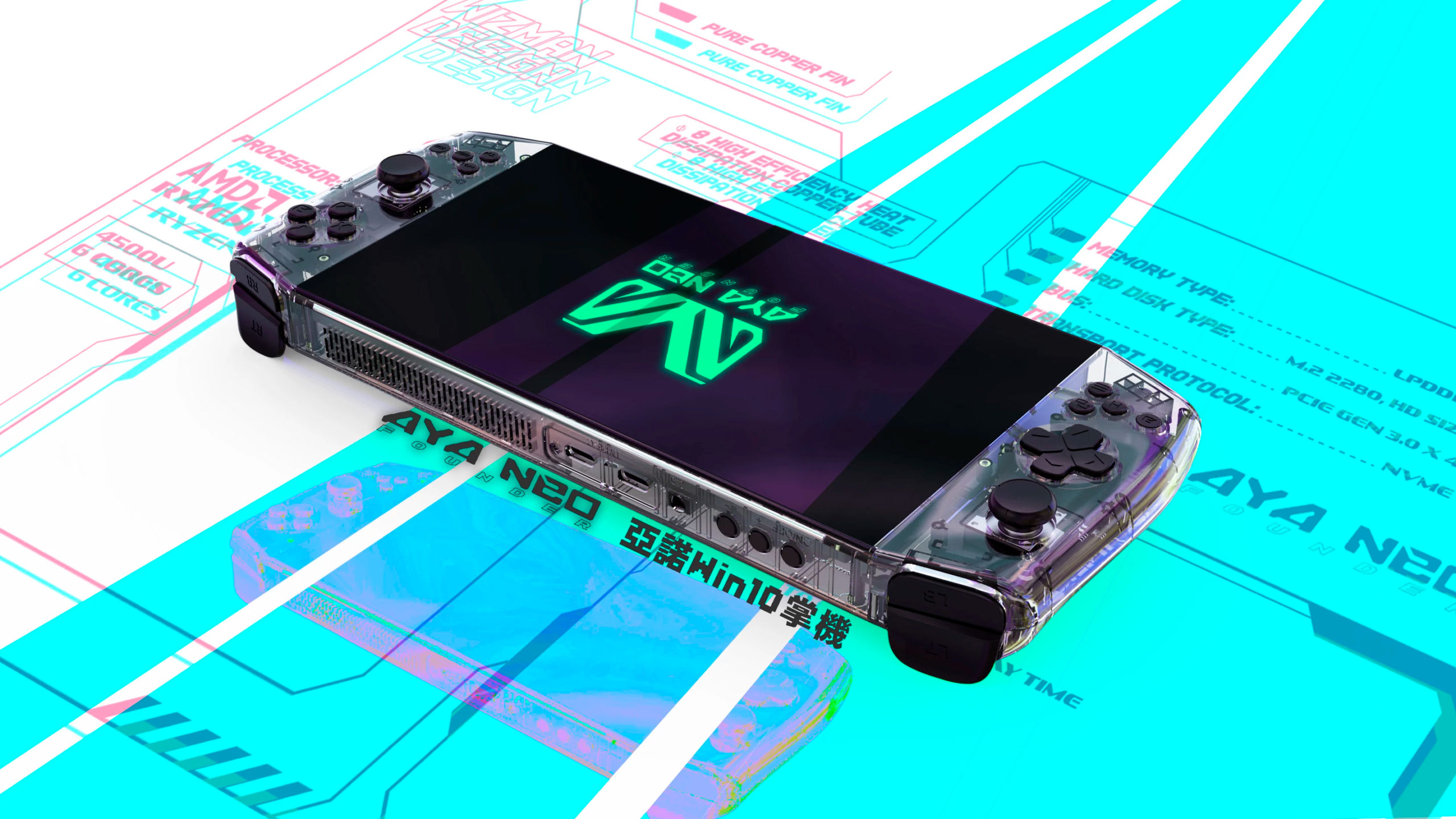

The creator of the Aya Neo Ryzen powered gaming tablet has decided to finally launch the Aya Neo worldwide. Next month, the Aya Neo will go on an Indiegogo campaign where you can purchase the tablet for $699. The campaign will last just 30 days.

The Aya Neo is a one-of-a-kind gaming tablet packed with full-blown mobile PC components in a form factor similar to the Nintendo Switch. Specs-wise, the Aya Neo features a Zen 2 based hexa-core Ryzen 5 4500U (with its integrated Vega IGP), 16GB of LPDDR4X 4266MHz RAM, an NVMe SSD, an 800p display, and a 47Wh battery.

While those specs don’t scream fast in a traditional gaming laptop, it’s not that bad for a tablet. In fact, the Aya Neo can pull off a stable 30 fps in Cyberpunk 2077 (at low settings) at its native resolution. With how small the Aya Neo is, that is quite an impressive feat. So if you’re looking for a tablet to play your PC games on, the Aya Neo is probably your best option right now.

(Pocket-lint) – Whether you have a monster PC, a MacBook, or just an everyday use-it-for-everything computer, there are many reasons why you’d need a monitor. That also means there are many things to consider when looking to buy one.

For some, only the biggest and sharpest panel will do. For others, it’s fast refresh rates for smooth visuals.

Whatever your reason for wanting a monitor, we’ve tested and rounded up some of the best monitors for all purposes available right now.

The Ultrathin series is a fine example of a stylish, minimalist design for a great price.

Because it has a slim, sleek metal stand, you don’t get much movement or adjustment out of it as you might from a big, plastic thing with lots of moving parts. That means if you’re tall – you can’t have the monitor at eye level, although we found with the ability to tilt the screen backward, we could get it angled enough to be pretty comfortable to use.

As an HDR-ready monitor, it’s great, especially for those looking for something simple, capable, and stylish to complement their minimal desk setup. While it’s not as pin-sharp as 4K, there’s plenty of detail in the Quad HD resolution panel. IPS LCD based tech also means you get good viewing angles too.

The 60Hz refresh rate, 5ms response time, and AMD FreeSync mean it’s a capable gaming monitor too. In terms of ports, you get two HDMI ports, a single 3.5mm output, and the power input.

Sure, we’d prefer it if it had a Thunderbolt port or a DisplayPort input, but as an all-rounder, it’s hard to criticize too much.

LG’s 27-inch 4K monitor is great if you’re after a standard 16:9 ratio screen in an attractive package that’s as accurate as it is sharp.

The stand doesn’t move up and down; it’s fixed, so you can’t adjust the height to be at a more ergonomic level without putting it on top of a raised surface of some kind.

However, while the stand height isn’t adjustable, the screen can be tilted to ensure there’s some adjustment there.

At 27-inches, it’s a good size, and the quality of the IPS panel is pretty surprising at this price point. Viewing angles are tremendous, and we found colors, contrast, and details to be really well balanced across the board. It made a great panel for editing photos and videos on.

It’s not the most highly tuned for gaming, but with the addition of AMD’s FreeSync, it’s good enough for most.

There are two HDMI 2.0 inputs; both support HDR sources and both can deliver 4K at 60Hz. Similarly, there’s a DisplayPort input of the same specification.

If you’re after an all-round great ultrawide panel for content creation, media consumption, MacBook use, gaming and everything in between, it’s hard to look past the BenQ EX3501R. It’s big, looks professional, feels solid, and offers a great experience regardless of what you want to use it for.

There’s a USB 3.1 Type-C port, so you’ll only need that one compatible cable as well as gain access to the USB hub. There are also both DisplayPort 1.4 and two HDMI 2.0 ports.

Although the AMD FreeSync is designed to ensure there’s no frame dropping or screen tearing/aliasing with AMD GPUs, we found the response time was fast enough that we were able to play games at 60fps with an Nvidia based GPU without issue.

Likewise, it made a great tool for editing video on long timelines thanks to the color reproduction and extra space granted by the ultra-wide ratio.

The solid build, classy chromed feet, and 60mm up-and-down travel ensured it looks good and is relatively adjustable. There’s no right/left movement, but with a curved monitor, you don’t tend to get that.

A big, all-encompassing screen that does everything you need it to, the 32-inch 4K and HDR-capable BenQ EW3280U is a truly brilliant option.

On the back there are two HDMI 2.0 ports for fast action 4K input, as well as a DisplayPort connection and a USB-C port with 60W Power Delivery output and DisplayPort capabilities, so you can charge and output display using a single USB-C cable from a compatible PC or Mac.

In theory, you could have a streaming box/stick, PC, console and your laptop all connected to the monitor at once and then switch between them using the included nifty little remote control.

The visual experience is generally great too. Being a big panel, it’s not as pin-sharp as some smaller 4K ones, but you get a lot more real estate to work with. It’s great for having multiple windows open, or working on video editing, and works just as well for watching movies or gaming.
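The sharpness trade-off can be put into rough numbers with a pixel density calculation. The sketch below is only illustrative: it assumes a 3840 x 2160 pixel panel for "4K" and picks 27 inches as the example of a smaller 4K monitor, and the helper name is made up for this example.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: diagonal resolution in pixels divided by diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# Assumed 4K UHD resolution; diagonals chosen for comparison only.
ppi_27 = pixels_per_inch(3840, 2160, 27)
ppi_32 = pixels_per_inch(3840, 2160, 32)

print(f"27-inch 4K: {ppi_27:.0f} ppi")
print(f"32-inch 4K: {ppi_32:.0f} ppi")
```

The same pixel count spread over a larger diagonal gives a lower density, which is why a 32-inch 4K panel looks slightly softer up close than a 27-inch one while offering more working area.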

AOC has long offered monitors that are great value for money, and the U2790PQU is no exception. It’s a great 4K monitor for working on thanks to a host of useful features and a sharp 4K panel.

The stand is adjustable, so you can slide it up and down to raise or lower the height. Regardless of your eye height, you should easily be able to find an ergonomic level for you. Plus, the screen can be tilted between -5 and 45 degrees.

Being IPS LCD means the accuracy of the screen is good, and 4K means it’s plenty sharp enough for anyone. Plus, that 27-inch size is just about the sweet spot for people working from home, or at the office. It has slim bezels, giving it an almost edge-to-edge appearance too.

At 32-inches diagonally, the BenQ PD3200U is a large display that’s not too enormous on your desk. It doesn’t take up much more space than a 27-inch iMac, thanks to having slimmer bezels.

The stand can swivel on its base 45 degrees to the left and to the right, and the screen can tilt from -5 to 20 degrees while also offering 150mm of height travel up and down.

The 4K UHD resolution IPS panel provides sharp details and crisp text and looks good from almost any angle. What’s more, with Dual View mode, you can have one side of the screen set up one way, and the other half calibrated for another source.

The only thing this display can’t do is keep up with ultra-fast gaming monitors. At 60Hz, it’s not the fastest screen going, but we were still able to play games at 60fps reliably, using the Nvidia GTX 1080Ti card.

Port-wise, there’s plenty on offer, with two HDMI 2.0 inputs as well as a DisplayPort 1.2 and mini DisplayPort 1.2, plus four USB outputs, two USB inputs, a 3.5mm line-in, a 3.5mm line-out and an SD card reader.

Writing by Cam Bunton. Editing by Max Freeman-Mills.

A digital photo frame is a small screen that can sit on your desk in your office or in your kitchen displaying your favorite pictures, changing at regular intervals. The first commercial digital photo frame was introduced in the 1990s shortly after the digital camera. Digital photo frames made a comeback in popularity during 2020, perhaps because people were staying at home more.

In this tutorial, we’ll turn our Raspberry Pi into a digital photo frame using MagicMirror and the GooglePhotos module. Please note, we will skip installation of the 2-way mirror in the original Magic Mirror project. Consider this project, “Magic Mirror, without the mirror.”

Timing: Plan for a minimum of 1 hour to complete this project.

The majority of this tutorial is based on terminal commands. If you are not familiar with terminal commands on your Raspberry Pi, we highly recommend reviewing 25+ Linux Commands Raspberry Pi Users Need to Know first.

To get started with this project, you’ll need to set up a Google Photo Album. We suggest that you create a new Photo Album and add 5 to 10 photos. You can add more photos later. The more photos in your album, the longer your Raspberry Pi digital photo frame will take to load.

1. Connect your screen, mouse and keyboard to your Raspberry Pi.

2. Boot your Raspberry Pi. If you don’t already have a microSD card see our article on how to set up a Raspberry Pi for the first time or how to do a headless Raspberry Pi install.

3. Update Raspberry Pi OS. Open a terminal and enter:

sudo apt-get update && sudo apt-get upgrade

4. Perform a basic installation of Magic Mirror on our Raspberry Pi with the instructions from the official Magic Mirror page. The commands should execute fairly quickly, with npm install taking the longest depending on your Raspberry Pi model and internet speed. On a Raspberry Pi 4 with high speed internet, npm install took approximately 5 minutes to execute.

curl -sL https://deb.nodesource.com/setup_10.x | sudo -E bash -

sudo apt install -y nodejs

git clone https://github.com/MichMich/MagicMirror

cd MagicMirror

npm install

cp config/config.js.sample config/config.js

npm run start

Your Raspberry Pi screen should now be filled with the default Magic Mirror screen.

5. Hit Ctrl-M to minimize and return to the Pi desktop.

6. Press Ctrl-C to stop Magic Mirror. This step is necessary to install the module that will show our Google Photos.

1. In the Pi terminal, install the Google Photos module.

cd ~/MagicMirror/modules

git clone https://github.com/eouia/MMM-GooglePhotos.git

cd MMM-GooglePhotos

npm install

2. Open your Chromium browser and navigate to the Google API Console. Log in with your Gmail account credentials. Full link: https://console.developers.google.com/

3. Create a new project with a name of your choice. I named my project ‘MagicMirror123’.

4. Click ‘+ Create Credentials’ and select ‘OAuth client ID’.

5. For Application type, select ‘TVs and Limited Input devices’ from the dropdown menu.

6. Click ‘Create’ to create your OAuth client ID.

7. Click ‘OK’ to return to the main Credentials page for your project.

8. On the OAuth 2.0 Client ID you just created, click the down arrow to download your credentials.

9. Open your File Manager from your Raspberry Pi desktop, navigate to Downloads and rename the file you just downloaded from ‘client_secret-x.json’ to ‘credentials.json’.

10. Move your newly renamed ‘credentials.json’ file to your MagicMirror/modules/MMM-GooglePhotos folder.

11. In your Terminal, run the following command to authenticate your Pi.

cd ~/MagicMirror/modules/MMM-GooglePhotos

node generate_token.js

12. Select your account when Google prompts you to authorize your device.

13. Scroll down, click ‘Advanced’ and then ‘Go to MagicMirror (unsafe)’.

14. Grant MagicMirror permissions on the following screens by clicking ‘Allow’ for each prompt.

15. Copy your Success code and paste it into your terminal. Press Enter.

16. Open your File Manager and navigate to /home/pi/MagicMirror/modules/MMM-GooglePhotos or type ‘ls’ in your Terminal (within the MMM-GooglePhotos directory) to view all files. If you see token.json as a file within this folder, you have successfully authorized your device to access your Google Photos.

17. Open the config.js file for editing in the /home/pi/MagicMirror/config folder.

18. In your config.js file, comment out all modules except for notifications by adding ‘/*’ before the Clock module and ‘*/’ after the Newsfeed module.

19. Add the code for MMM-GooglePhotos into the modules section of your config.js file.

{
    module: "MMM-GooglePhotos",
    position: "fullscreen_above",
    config: {
        albums: ["MagicMirror"], // Set your album name.
        updateInterval: 1000 * 60, // minimum 10 seconds.
        sort: "random", // "new", "old", "random"
        uploadAlbum: null, // Only album created by `create_uploadable_album.js`.
        condition: {
            fromDate: null, // Or "2018-03", RFC ... format available
            toDate: null, // Or "2019-12-25",
            minWidth: null, // Or 400
            maxWidth: null, // Or 8000
            minHeight: null, // Or 400
            maxHeight: null, // Or 8000
            minWHRatio: null,
            maxWHRatio: null,
        },
        showWidth: 800, // Set this to the resolution of your screen width
        showHeight: 480, // Set this to the resolution of your screen height
        timeFormat: "YYYY/MM/DD HH:mm", // Or `relative` can be used.
    }
},

20. In the config section of MMM-GooglePhotos, enter the name of the album you created at the beginning of this project in the ‘albums’ parameter.

21. Set the order in which you wish to view your photos in the ‘sort’ parameter.

22. Set the frequency to change the images in the ‘updateInterval’ parameter. The default is 60 seconds.

23. Set the resolution of the screen that you are using in the parameters ‘showWidth’ and ‘showHeight’. The default resolution for the 7” Raspberry Pi screen is 800 x 480.

24. Save your changes in config.js.

25. In your terminal, run the command ‘npm run start’ to restart your Magic Mirror. There will be a 30 second or more delay while your Pi pulls images from your Google Photos album. Your Pi will need to stay connected to the internet to display your images.

26. To stop Magic Mirror, press Ctrl-M followed by Ctrl-C in the terminal. You can continue to make adjustments in your config.js file until you are happy with the way that your images are displayed.

27. At this point, you can start adding more images to your Google Photos album. Your Raspberry Pi Magic Mirror digital photo frame should automatically update with any new images.
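As a rough sanity check for steps 16 through 23, the sketch below uses Node.js (already installed for Magic Mirror) to confirm that the two authorization files exist and to assemble a minimal MMM-GooglePhotos entry. The helper names missingAuthFiles and photoFrameModule are hypothetical and not part of the module. The sketch also assumes the module falls back to its own defaults for the options it leaves out (uploadAlbum, condition, timeFormat).

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Files the tutorial places in the module folder (steps 10 and 16).
const REQUIRED_FILES = ["credentials.json", "token.json"];

// Return the names of required authorization files missing from moduleDir.
function missingAuthFiles(moduleDir) {
  return REQUIRED_FILES.filter(
    (name) => !fs.existsSync(path.join(moduleDir, name))
  );
}

// Build an MMM-GooglePhotos entry for the modules array in config.js.
// `seconds` is clamped to the 10-second minimum noted in the sample config.
function photoFrameModule({ album, seconds = 60, sort = "random", width = 800, height = 480 }) {
  return {
    module: "MMM-GooglePhotos",
    position: "fullscreen_above",
    config: {
      albums: [album],
      updateInterval: 1000 * Math.max(10, seconds),
      sort,
      showWidth: width,
      showHeight: height,
    },
  };
}

// Assumed default install path on the Pi and an album named "MagicMirror".
const moduleDir = path.join(os.homedir(), "MagicMirror", "modules", "MMM-GooglePhotos");
console.log("Missing auth files:", missingAuthFiles(moduleDir));
console.log(JSON.stringify(photoFrameModule({ album: "MagicMirror", seconds: 30 }), null, 2));
```

An empty list of missing files means both credentials.json and token.json are in place. The printed object can be compared with the entry typed by hand in config.js.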

If you reboot your Pi, you’ll need to enter the command ‘npm run start’ each time you want to start your digital photo frame. Let’s set our Raspberry Pi to display our digital photo frame on boot.

1. Install PM2 by running the following commands in your Raspberry Pi terminal. PM2 is a daemon process manager to keep your applications running continuously. In this project we will utilize PM2 to continuously run Magic Mirror for our digital photo frame.

sudo npm install -g pm2

pm2 startup

2. Your terminal will provide the next command. Copy and paste the command into your terminal.

sudo env PATH=$PATH:/usr/bin /usr/lib/node_modules/pm2/bin/pm2 startup systemd -u pi --hp /home/pi

3. Create a shell script named mm.sh.

cd ~

nano mm.sh

4. Within mm.sh enter the commands to start Magic Mirror.

cd ./MagicMirror

DISPLAY=:0 npm start

5. Press Ctrl-X to exit, ‘y’ to save mm.sh, and Enter to return to the Terminal.

6. Make mm.sh an executable file with the chmod command.

chmod +x mm.sh

7. Start your Magic Mirror with PM2.

pm2 start mm.sh

Your Magic Mirror should automatically start running again. Run ‘pm2 save’ so that PM2 restores this process after a reboot, then test your auto-start functionality by rebooting.

You may find that after setting up your automatic digital photo frame that your Raspberry Pi screen goes blank after 10 minutes. In this case, you can disable screen blanking.

1. Navigate to the Raspberry Pi Configuration menu. From your Raspberry Pi start menu, click ‘Preferences’ and select ‘Raspberry Pi Configuration’.

2. Toggle Screen Blanking to Disable on the Display tab. Select the ‘Display’ tab. For ‘Screen Blanking’ select Disable. Click OK.

3. Click Yes when you are prompted to Reboot.

If you’re looking for a gaming laptop that’s able to handle demanding titles or high-resolution gaming but don’t want the thickness, weight and loud fans that often come with gaming laptops, a PC running an Nvidia Max-Q graphics card may be the answer.

When shopping for a gaming laptop, you’ll probably run into models featuring some sort of Max-Q graphics card, such as an “RTX 2080 Max-Q.” Max-Q is a graphics card technology Nvidia first launched in 2017 that allows the rendering horsepower of the company’s higher-end graphics cards, previously reserved for desktop PCs or very bulky gaming laptops, to fit in a slimmer form factor that’s more like mainstream laptops. There are Max-Q versions of Nvidia’s GTX and RTX graphics cards, but there is no AMD equivalent to Nvidia’s Max-Q.

If you’re wondering about the unique name, the term Max-Q is one that Nvidia borrowed from the aerospace world. As Nvidia detailed in a blog post, Max-Q is when a spacecraft has its maximum dynamic pressure. Go figure.

But for PCs, Max-Q is about allowing laptops to run Nvidia graphics technology while still being thinner, quieter and more energy-efficient than other gaming laptops. In general, graphics cards require substantial heat management, which leads to bulky, loud cooling systems and the thick and heavy shape of typical gaming laptops.

Max-Q graphics cards are meant to optimize efficiency and cooling systems so you can run heavy graphics workloads, such as 4K gaming, on a laptop that doesn’t look like a heavily ventilated, noisy, chunky PC from the ‘90s. Max-Q laptops aim for a peak noise level of 40dBA, measured from a distance of 25cm, when facing “average” gaming workloads and room temperatures.

Again, Max-Q graphics cards are only found in laptops. You wouldn’t buy one to equip your gaming desktop or custom PC build. Laptop vendors currently making laptops with Max-Q graphics cards include Acer, Alienware, Asus, Dell, Gigabyte, HP, Lenovo, MSI and Razer. Sadly, vendors don’t always clearly state whether their system is using a Max-Q or non-Max-Q (sometimes called Max-P) mobile GPU, but we’ll always tell you in our laptop reviews.

Max-Q graphics cards are generally slightly less powerful than their native counterpart (i.e. you can expect slightly lower frame rates from a laptop running an RTX 2080 Max-Q than one running a regular mobile RTX 2080, for example). In testing, we’ve found an RTX 2070 Max-Q laptop to about tie with an RTX 2060 non-Max-Q laptop. Ultimately, Max-Q imposes a power limit that feeds into lower clocks, so very different GPUs end up performing at a similar level.

But when comparing a Max-Q to a non-Max-Q laptop, the former should still be thinner, lighter and quieter than the other, as well as less expensive (assuming all else is equal).

Max-Q graphics cards are able to fit in thin laptops due to Nvidia optimizing them for “peak efficiency.” So rather than ramping up the clock speed, voltages and memory bus to deliver the best performance, they are tuned to deliver the best performance possible in a given thermal envelope or at a given level of sound output. According to the company, chips, drivers, the control system and thermal and electrical components are carefully engineered for Max-Q graphics cards.

Max-Q laptops still have cooling systems, but their makers have to carefully engineer things like thermal and electrical designs, heatpipes, heatsinks, high-efficiency regulators, high-quality yet quieter fans and other components.

As Nvidia has moved onto its RTX 20-series and, soon, RTX 30-series mobile GPUs, its Max-Q tech has also evolved.

The second generation of Max-Q, found in relevant mobile RTX 20-series cards, features efficiency-focused improvements, including Dynamic Boost and support for Advanced Optimus.

Dynamic Boost introduces a more flexible way for a laptop to share its thermal capabilities between the graphics silicon and the CPU. With Dynamic Boost, the Max-Q graphics card will use up to 15W more power, for up to 10% better performance and higher frame rates, when the CPU’s workload is lightened. One example of when a laptop often won’t need a CPU’s full power is during gaming, especially if that chip has a lot of CPU cores and threads.
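To make the idea of a shared power budget concrete, here is a toy model in JavaScript. It is only an illustration of the principle, not Nvidia's actual algorithm: the function name, the 125W total budget and the 80W GPU baseline are assumptions, and only the 15W ceiling comes from the description above.

```javascript
// Illustrative model only. The 15 W ceiling comes from the text;
// every other name and number here is an assumption.
const MAX_GPU_BOOST_W = 15;

// Given a shared power budget and the CPU's current draw,
// return the power the GPU would be allowed to use.
function gpuPowerTarget({ totalBudgetW, gpuBaseW, cpuDrawW }) {
  // Power left over once the GPU baseline and the CPU are served.
  const spareW = Math.max(0, totalBudgetW - gpuBaseW - cpuDrawW);
  // The GPU may claim the spare power, but never more than the boost ceiling.
  return gpuBaseW + Math.min(MAX_GPU_BOOST_W, spareW);
}

// CPU fully loaded: nothing to spare, the GPU stays at its baseline.
console.log(gpuPowerTarget({ totalBudgetW: 125, gpuBaseW: 80, cpuDrawW: 45 }));
// CPU lightly loaded: 20 W spare, but the boost is capped at 15 W.
console.log(gpuPowerTarget({ totalBudgetW: 125, gpuBaseW: 80, cpuDrawW: 25 }));
// CPU partly loaded: only 7 W spare, so the GPU gains 7 W.
console.log(gpuPowerTarget({ totalBudgetW: 125, gpuBaseW: 80, cpuDrawW: 38 }));
```

In this model the GPU only gains power that the CPU is not using, which is why the benefit shows up mainly in games that leave CPU headroom.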

RTX 20-series Max-Q cards can also support Nvidia’s Advanced Optimus Technology, which helps laptop battery life by switching over to the CPU’s integrated graphics for less intense workloads, such as web surfing or watching videos. Advanced Optimus Technology also works with Nvidia G-Sync. Laptop vendors have to choose to implement the feature. Examples include the Lenovo Legion 7i and 5i so far.

Starting January 26, laptops with mobile RTX 30-series cards will be available. This also introduces the third generation of Max-Q.

Updates this time around are focused on gaming speed, specifically:

Dynamic Boost 2.0 claims a “larger performance boost” than its predecessor and works on a per-frame basis. Meanwhile, WhisperMode 2.0 uses AI algorithms to control CPU, graphics card and PC temperatures and fan speeds for quieter performance. And Resizable BAR is Nvidia’s answer to AMD Smart Access Memory (SAM). It looks to improve performance by letting your CPU “access the entire GPU frame buffer at once” via PCIe.

Notably, Nvidia isn’t being very specific here, so we’ll have to wait until the next generation of Max-Q laptops ends up in our lab before knowing if these improvements are impactful.

This article is part of the Tom’s Hardware Glossary.

Further reading:

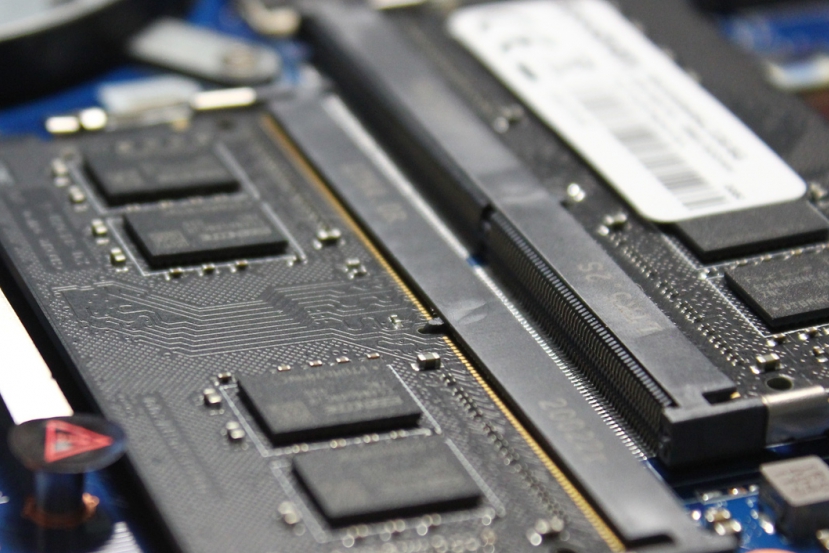

Today we look at laptop RAM in this review of the GoodRam DDR4 SO-DIMM 3200 MHz CL22 2x8GB kit. Except for models with soldered memory, notebooks use the SO-DIMM format as standard, which makes it easy to replace modules or expand memory.

These GoodRAM modules are interesting because they run at 3,200 MHz under the JEDEC standard at 1.2 V, which means that no overclocking or XMP profiles are required to reach that speed.

Unlike 3,200 MHz modules that are really overclocked 2,667 MHz modules, these run at 3,200 MHz natively. Keep in mind that most laptops do not support XMP or memory overclocking, so modules like these are needed to run at 3,200 MHz on a laptop (along with a processor that supports that speed).

The GoodRam DDR4 SO-DIMM 3200 MHz CL22 modules arrive with a black PCB and 4 chips per side. PCB color is not especially important for laptop memory, which is hidden in most cases, but it does lend a more “exclusive” touch than the typical green PCB.

Unlike the SO-DIMM modules in GoodRam’s own IRDM range, the company has not included any heat spreader here, such as a metal foil attached to the memory chips. Given that they run at 1.2 V, it should not be strictly necessary, but it would not hurt in high-performance gaming machines.

The modules come in standard SO-DIMM format and are very simple to install. Because they comply with the JEDEC standard, they run at 3,200 MHz straight away without any prior configuration (as long as the laptop’s processor natively supports it). In this case we used the ASUS TUF Gaming A15 with the AMD Ryzen 7 4800H processor, which fully supports these speeds.

As we have said, these modules run natively at 3,200 MHz (1,600 MHz DDR) and comply with the JEDEC standard, which guarantees those values at 1.2 V without overclocking or XMP profiles.

Their specifications are rounded out by timings of 20-22-22-52-83.

The test platform was the ASUS TUF Gaming A15, a laptop we have already reviewed, which also ships with two 8 GB DDR4-3200 CL22 memory modules (from Samsung) alongside its AMD Ryzen 7 4800H. We also ran tests with two other 8 GB GoodRAM modules, rated DDR4-2666 CL15, to compare against other speeds and latencies.

We carried out several tests, starting with the memory tests in the AIDA64 testing and monitoring suite, which directly report read, write and copy speeds as well as latency.

The GoodRam DDR4 SO-DIMM 3200 MHz CL22 modules easily outperform the 2,667 MHz CL16 modules. Despite the higher latencies, the extra 533 MHz delivers much better read, copy and write speeds, and even better overall latency.
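The trade-off between a higher CAS latency and a higher data rate can be estimated with two standard formulas, sketched here in JavaScript. This is a theoretical calculation, not a reproduction of the AIDA64 measurements; the function names are our own, and dual-channel operation with 64-bit channels is assumed.

```javascript
// First-word CAS latency in nanoseconds. CL counts cycles of the I/O clock,
// which runs at half the data rate (DDR makes two transfers per clock).
function casLatencyNs(dataRateMTs, cl) {
  return (cl * 2000) / dataRateMTs;
}

// Theoretical peak bandwidth in GB/s, assuming 64-bit (8-byte) channels.
function peakBandwidthGBs(dataRateMTs, channels = 2) {
  return (dataRateMTs * 8 * channels) / 1000;
}

// The two configurations compared in the text above.
for (const [rate, cl] of [[3200, 22], [2667, 16]]) {
  console.log(
    `DDR4-${rate} CL${cl}: ${casLatencyNs(rate, cl).toFixed(2)} ns CAS latency, ` +
    `${peakBandwidthGBs(rate).toFixed(1)} GB/s peak`
  );
}
```

On paper, the CL22 modules give up under 2 ns of CAS latency (13.75 ns against roughly 12 ns) while gaining about 20% in peak bandwidth (51.2 GB/s against roughly 42.7 GB/s), which is consistent with the faster modules winning the throughput tests.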

Compared with Samsung’s stock RAM, which has the same specifications, the GoodRAM modules post somewhat faster figures, although the gap is very small because there is hardly any difference between the two.

RAM Read Speed - AIDA64

The PassMark test shows similar results, with the GoodRam DDR4 SO-DIMM 3200 MHz CL22 taking the lead again. Once more, 3,200 MHz at CL22 pays off more than 2,667 MHz at CL16.

Since the Ryzen 7 4800H’s integrated Vega GPU uses system RAM, we also ran the 3DMark Time Spy DirectX 12 graphics benchmark to check for a difference in performance, and the answer is yes.

GoodRAM’s 3,200 MHz CL22 modules deliver a notable performance increase over 2,666 MHz CL15 memory. The difference from the stock Samsung modules, also 3,200 MHz CL22, is much less noticeable, but as in the tests above, the GoodRAM modules beat them by a small margin.

Finally, we ran direct CPU performance tests with Cinebench to check for performance variations. Here the differences were very small and not very meaningful, since memory speed has little influence on this type of test.

We ran a memory stress test for 22 minutes to check temperatures, using the AIDA64 stress test with the laptop fully assembled. At the 20-minute mark the cover was opened while the test was still running, and an image was taken with the thermal camera.

As we can see, the upper module peaked at about 57 °C, while the lower module stayed slightly below 54 °C. Temperatures therefore remained well contained and never reached problematic levels.

The hottest point of the notebook was the VRM, at 90 °C.

As we have seen, the GoodRam DDR4 SO-DIMM 3200 MHz CL22 modules are very easy to use and need no extra configuration, thanks to their support for the 3,200 MHz JEDEC standard at 1.2 V. That sets them apart from many other 3,200 MHz SO-DIMM modules on the market, whose specifications or 1.35 V voltages fall outside the standard and therefore require overclocking or XMP profiles, something very few laptops support.

The performance improvement over 2,667 MHz modules was clear in testing, even though those modules ran at lower latencies. And even against modules with similar speeds and latencies, the GoodRAM modules came out ahead.

Their capacity of 8 GB per module may fall a bit short if we want to expand. Laptops do not usually have more than 1 or 2 SO-DIMM slots, so, with few exceptions, it would be difficult to go beyond 16 GB, and for more memory we would have to move to the 16 GB modules that Goodram also offers in its catalog.

However, if we want to step up from 4 or 8 GB modules of slower memory to 3,200 MHz, these modules are a very good choice.

Also, at 38.90 euros per module, the GoodRam DDR4 SO-DIMM 3200 MHz CL22 is very well positioned on the market, since the vast majority of 3,200 MHz modules of that capacity are more expensive.

Performance, a contained price and the use of standard values to ensure the best compatibility make the GoodRam DDR4 SO-DIMM 3200 MHz CL22 an excellent option for anyone who wants extra performance from their notebook memory.


Samsung has teased, on a Chinese social network, the next innovation in laptop design: a webcam under the display, a novelty that could almost eliminate bezels.

by Manolo De Agostini, published 15 January 2021 at 19:31 in the Portable channel


Samsung Display is working on almost bezel-less laptop panels with the webcam “under the screen”. The South Korean company previewed this innovation, which we will also see on smartphones, on the Chinese social network Weibo.

UPC (Under Panel Camera) technology, commercially called Samsung Blade Bezel, makes it possible to build laptops with OLED screens and very thin, almost invisible bezels, without forcing notebook manufacturers to move the webcam elsewhere. Dell has in the past placed the webcam in the bottom bezel, an unfortunate location, while Huawei chose to build it into the keyboard on the MateBook X Pro.

A lot of information about this technology is still missing. For example, we do not know the webcam’s resolution, how its position affects the shot compared with a traditional webcam, or the image quality in the portion of the display that sits over the webcam. These are all unknowns that will have to be checked in hands-on testing.

Wow ⊙∀⊙! Samsung Display reveals its under-Display camera technology for the first time, which will be used for OLED notebook screens first pic.twitter.com/Fu4Ublvsru

Ice universe (@UniverseIce), January 14, 2021

Webcam quality is not something to overlook, especially in the current period, when remote working and distance learning demand good audio and video hardware. We will see, but this could certainly be a further step toward more compact and better-looking devices, and nobody is likely to complain about that.