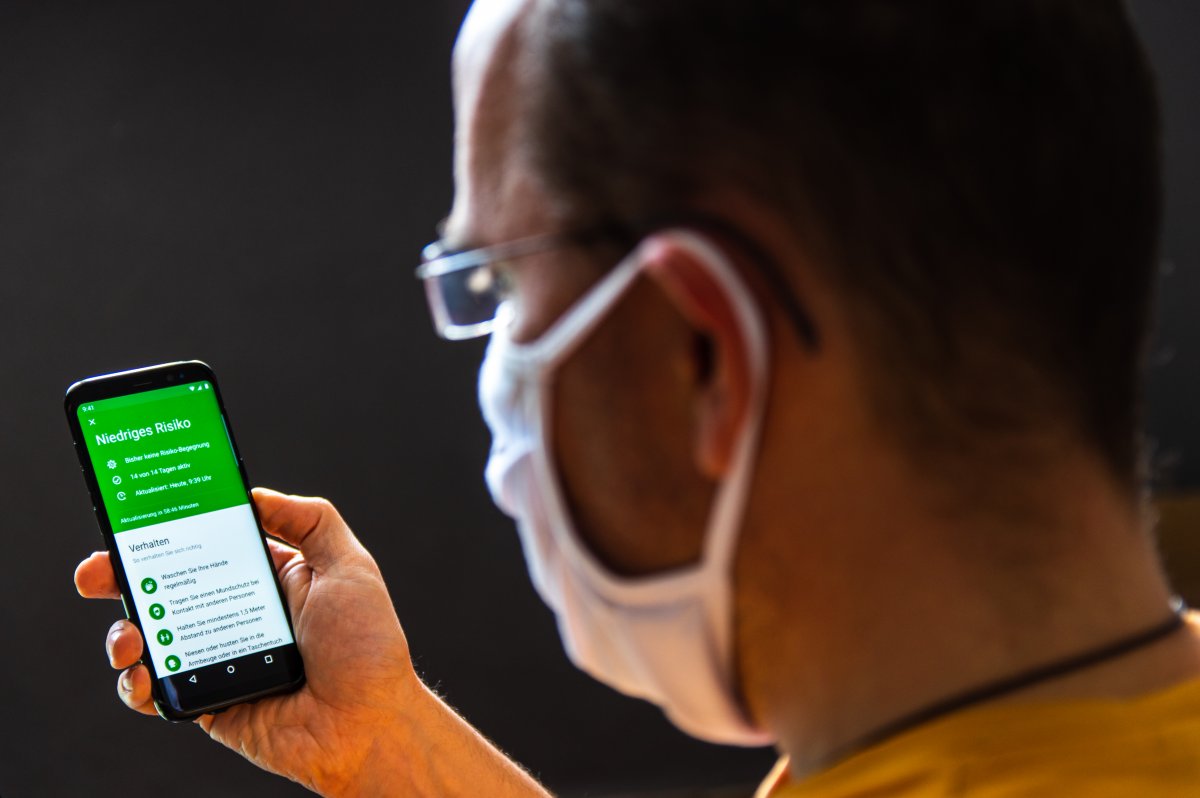

It is not only researchers who find that the success of the Corona-Warn-App depends crucially on its acceptance. Greater acceptance was also one of the reasons why an early decision was made in favor of a decentralized solution, which was also to be developed as open source for the sake of transparency. And that is what happened: the source code can be viewed on GitHub.

Without Google Services

All of the source code? Nearly. One small gap remains … The app's functionality relies on the so-called "Exposure API" from Google (or Apple), and that code is not open source. The app itself can currently only be obtained from the respective "official stores" of Google and Apple. Multiple requests to make the APK file for Android available elsewhere, e.g. on GitHub, were rejected mainly for two reasons:

- The app would not work anyway without pre-installed Google Services (which has not been true since the beginning of September, because microG now also implements the Exposure API).
- The RKI had not granted approval for this.

On the one hand, the RKI emphasizes that everyone should please install this app. On the other hand, it is not available to everyone: users of newer Huawei devices in particular are still left out in the cold. So are those who forgo the Google connection (including the Google account required to use the Play Store) to protect their privacy.

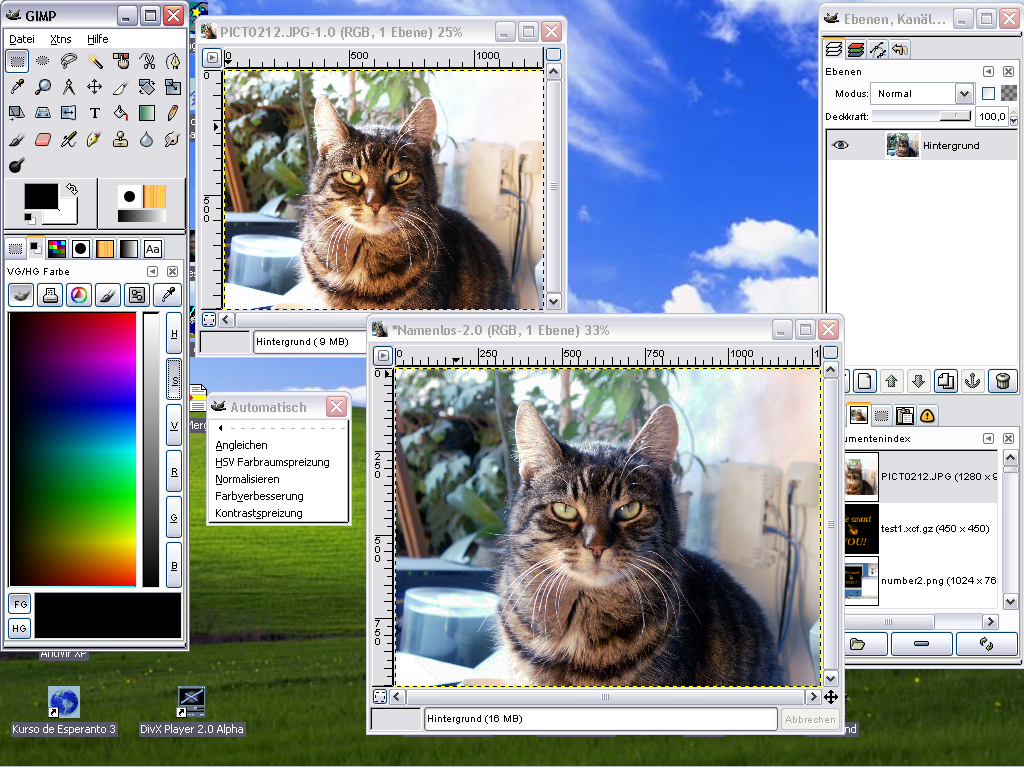

In a few days at F-Droid

So Marvin Wißfeld, the author of the microG framework, sat down once more and, within a few weeks, single-handedly achieved what the large companies SAP and DTAG apparently failed to do despite government funding: he also recreated the client libraries (i.e. the non-free Google components previously required in the app itself to address the Exposure API) as open source, and made them available under a free license, of course. They are built as a so-called "drop-in replacement", so they can take the place of the proprietary Google libraries in just a few steps.

The F-Droid community immediately took this as an opportunity to put it all together in a fork of the app, and just a few hours later a working app that is completely open source had been built. It is currently still being tested internally but should be available on F-Droid in a few days. And of course SAP and DTAG are also free to switch to these open source libraries. The corresponding offer has already been made to them. But the RKI will probably have to agree to that as well.

(bme)