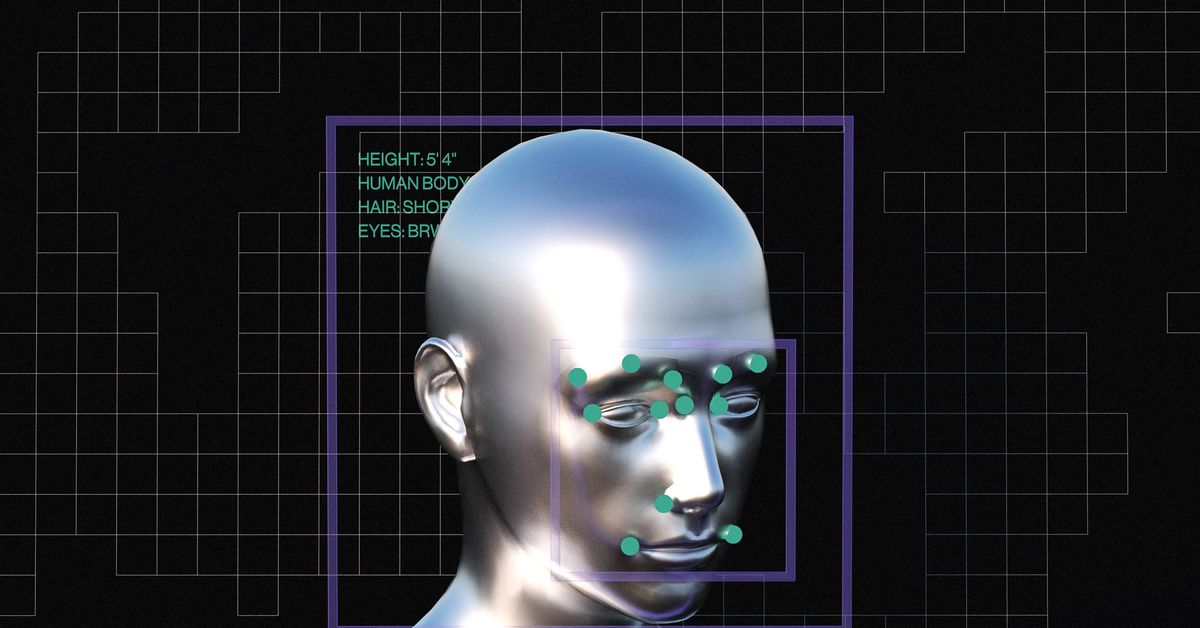

Is there any way out of Clearview’s facial recognition database?

Source: The Verge, added 9 June 2021

In March 2020, two months after The New York Times exposed that Clearview AI had scraped billions of images from the internet to create a facial recognition database, Thomas Smith received a dossier encompassing most of his digital life.

Using the recently enacted California Consumer Privacy Act, Smith asked Clearview what it had on him. The company sent him pictures that spanned moments throughout his adult life: a photo from when he got married and started a blog with his wife, another from when he was profiled by his college’s alumni magazine, even a profile photo from a Python coding meetup he had attended a few years earlier.

“That’s what really threw me: All the things that I had posted to Facebook and figured, ‘Nobody’s going to ever look for that,’ and here it is all laid out in a database,” Smith told The Verge.

Clearview claims its massive surveillance database holds 3 billion photos, accessible to any law enforcement agency with a subscription, and it’s likely you or people you know have been scooped up in the company’s dragnet. It’s known to have scraped sites like Facebook, LinkedIn, YouTube, and Instagram, and it can use profile names and associated images to build a trove of identified and scannable facial images.

Little is known about the accuracy of Clearview’s software, but it appears to be powered by a huge collection of scraped and identified images, drawn from social media profiles and other personal photos on the public internet. That scraping is only possible because social media platforms like Facebook have consolidated immense amounts of personal data on their platforms and then largely ignored the risks of large-scale data analysis projects like Clearview. It took Facebook until 2018 and the Cambridge Analytica scandal to lock down developer tools that could be used to exploit its users’ data. Even after the extent of Clearview’s scraping came to light, Facebook and other tech platforms responded largely with strongly worded letters asking Clearview to stop scraping their sites.

But with large platforms unable or unwilling to go further, the average person on the internet is left with a difficult choice. Any new pictures that feature you, whether a simple Instagram shot or a photo tagged on a friend’s Facebook page, are potentially grist for the mill of a globe-spanning facial recognition system. But for many people, hiding our faces from the internet doesn’t feel like an option. These platforms are too deeply embedded in public life, and our faces are too central to who we are. The challenge is finding a way to share photos without submitting to the broader scanning systems — and it’s a challenge with no clear answers.

In some ways, this problem is much older than Clearview AI. The internet was built to facilitate the posting of public information, and social media platforms entrenched this idea; Facebook recruited a billion users between 2009 and 2014, when posting publicly on the internet was its default setting. Others like YouTube, Twitter, and LinkedIn encourage public posting as a way for users to gain influence, contribute to global conversations, and find work.

Historically, any one person’s contribution to the unfathomable mass of graduation pics, vacation group shots, and selfies online would have been lost in the crowd, offering a kind of safety in numbers. You might see a security camera in a convenience store, but it’s unlikely anyone is actually watching the footage. Clearview thrives on exactly this assumption, because automated facial recognition can now pick through the digital glut at the scale of the entire public internet.

“Even when the world involved a lot of surveillance cameras, there wasn’t a great way to analyze the data,” said Catherine Crump, professor at UC Berkeley’s School of Law. “Facial recognition technology and analytics generally have been so revolutionary because they’ve put an end to privacy by obscurity, or it seems they may soon do that.”

This means that you can’t rely on blending in with the crowd. The only way to stop Clearview from gathering your data is to keep it off the public internet in the first place. Facebook makes certain information, like your profile picture and cover photo, public with no option to make it private. Private accounts on Instagram also cannot hide profile pictures. If you’re worried about information being scraped from your Facebook or Instagram account, these are the first images to change. LinkedIn, on the other hand, allows you to limit the visibility of your profile picture to only people you’ve connected with.

Outside of Clearview, facial recognition search engines like PimEyes have become popular tools accessible to anyone on the internet, and other enterprise facial recognition apps like FindFace work with oppressive governments across the world.

Another key component of ensuring the privacy of those around you is making sure you’re not posting pictures of others without their consent. Smith, who requested his data from Clearview, was surprised at how many other people, like his friends and his college adviser, had been scooped up in the database just by appearing in photos with him.

But since some images on the internet, like those on Facebook and Instagram, simply cannot be hidden, some AI researchers are exploring ways to “cloak” images to evade Clearview’s technology, as well as any other facial recognition technology trawling the open web.

In August 2020, a project called Fawkes, released by the University of Chicago’s SAND Lab, pitched itself as a potential antidote to Clearview’s pervasive scraping. The software works by subtly altering the parts of an image that facial recognition uses to distinguish one person from another, while trying to preserve how the image looks to humans. This kind of exploit against an AI system is called an “adversarial attack.”

Fawkes highlights the difficulty of designing technology that tries to hide images or limit the accuracy of facial recognition. Clearview draws on hundreds of millions of identities, so while individual users might be able to get some benefit from using the Windows and Mac app developed by the Fawkes team, the database won’t meaningfully suffer from a few hundred thousand fewer profiles.

Ben Zhao, the University of Chicago professor who oversees the Fawkes project, says that Fawkes works only if people are diligent about cloaking all of their images. It’s a big ask, since users would have to juggle multiple versions of every photo they share.

On the other hand, a social media platform like Facebook could tackle the scale of Clearview by integrating a feature like Fawkes into its photo uploading process, though that would simply shift which company has access to your unaltered images. Users would then have to trust Facebook not to use its access to this now-proprietary data for its own ad targeting or other tracking.

Zhao and other privacy experts agree that adversarial tricks like Fawkes aren’t a silver bullet against coordinated scraping campaigns, including those that build facial recognition databases. Evading Clearview will take more than one technical fix or a privacy checkup nudge on Facebook. Instead, platforms will need to rethink how data is uploaded and maintained online, and which data can be publicly accessed at all. That would mean fewer public photos and fewer opportunities for Clearview to add new identities to its database.

Jennifer King, privacy and data policy fellow at Stanford’s Institute for Human-Centered Artificial Intelligence, says one approach is for data to be automatically deleted after a certain amount of time. Part of what makes services like Snapchat more private (when set up properly) than Facebook or Instagram is its dedication to short-lived media posted mainly to small, trusted groups of people.

Laws in some states and countries are also starting to catch up with privacy threats online. These laws bypass platforms like Facebook and instead demand accountability from the companies actually scraping the data. The California Consumer Privacy Act allows residents to ask for a copy of the data that companies like Clearview hold on them, and similar provisions exist in the European Union. Some laws also require that the data be deleted at the user’s request.

But King notes that just because the data is deleted once doesn’t mean the company can’t simply grab it again.

“It’s not a permanent opt-out,” she said. “I’m concerned that you execute that ‘delete my data’ request on May 31st, and on June 1st, they can go back to collecting your data.”

So if you’re going to lock down your online presence, make sure to change your privacy settings and remove as many images as possible before asking companies to delete your data.

But ultimately, to prevent bad actors like Clearview from obtaining data in the first place, users are at the mercy of social media platforms’ policies. After all, it’s the current state of privacy settings that has allowed a company like Clearview to exist at all.

“There’s a lot you can do to safeguard your data or claw it back, but ultimately, for there to be change here, it needs to happen collectively, through legislation, through litigation, and through people coming together and deciding what privacy should look like,” Smith said. “Even people coming together and saying to Facebook, ‘I need you to protect my data more.’”
