Nvidia’s flagship A100 compute GPU, introduced last year, delivers the leading-edge performance required by cloud datacenters and supercomputers, but it is far too powerful and expensive for more down-to-earth workloads. So today at GTC the company introduced two younger siblings for its flagship: the A30 for mainstream AI and analytics servers, and the A10 for mixed compute and graphics workloads.

Comparison of Nvidia’s A100-Series Datacenter GPUs

| | A100 for PCIe | A30 | A10 |
|---|---|---|---|
| FP64 | 9.7 TFLOPS | 5.2 TFLOPS | – |
| FP64 Tensor Core | 19.5 TFLOPS | 10.3 TFLOPS | – |
| FP32 | 19.5 TFLOPS | 10.3 TFLOPS | 31.2 TFLOPS |
| TF32 | 156 TFLOPS | 82 TFLOPS | 62.5 TFLOPS |
| bfloat16 | 312 TFLOPS | 165 TFLOPS | 125 TFLOPS |
| FP16 Tensor Core | 312 TFLOPS | 165 TFLOPS | 125 TFLOPS |
| INT8 | 624 TOPS | 330 TOPS | 250 TOPS |
| INT4 | 1,248 TOPS | 661 TOPS | 500 TOPS |
| RT Cores | – | – | 72 |
| Memory | 40 GB HBM2 | 24 GB HBM2 | 24 GB GDDR6 |
| Memory Bandwidth | 1,555 GB/s | 933 GB/s | 600 GB/s |
| Interconnect | 12 NVLinks, 600 GB/s | ? NVLinks, 200 GB/s | – |
| Multi-Instance GPU | 7 MIGs @ 5 GB | 4 MIGs @ 6 GB | – |
| Optical Flow Acceleration | – | 1 | – |
| NVJPEG | – | 1 decoder | ? |
| NVENC | – | ? | 1 encoder |
| NVDEC | – | 4 decoders | 1 decoder (+AV1) |
| Form Factor | FHFL | FHFL | FHFL |
| TDP | 250W | 165W | 150W |

The Nvidia A30: A Mainstream Compute GPU for AI Inference

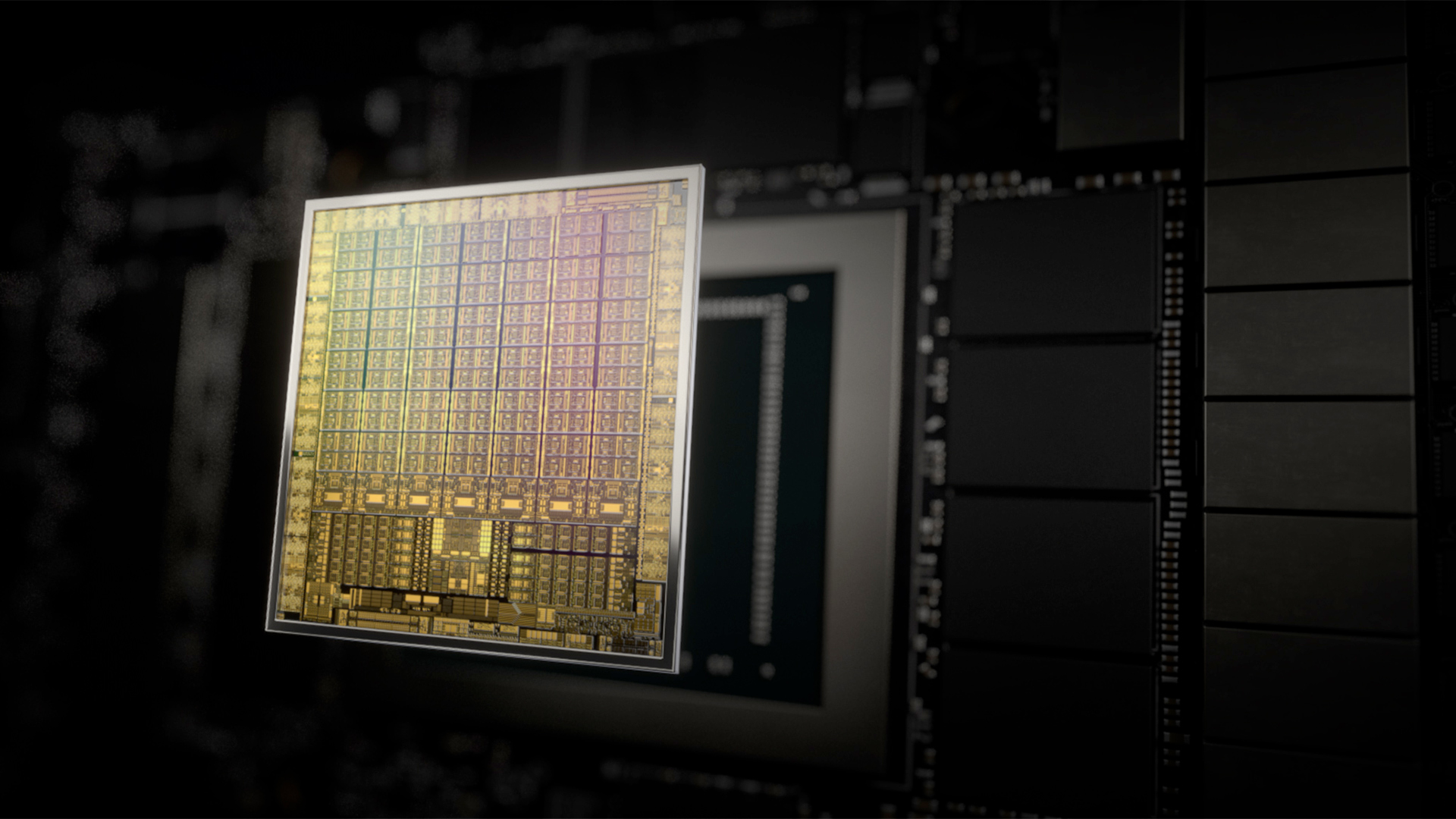

Nvidia’s A30 compute GPU is indeed the A100’s little brother and is based on the same compute-oriented Ampere architecture. It supports the same features, including a broad range of math precisions for AI and HPC workloads (FP64, FP64 Tensor Core, FP32, TF32, bfloat16, FP16, INT8, INT4) and even multi-instance GPU (MIG) capability with 6GB instances. In terms of performance, the A30 offers slightly more than 50% of the A100’s throughput: 10.3 FP32 TFLOPS, 5.2 FP64 TFLOPS, and 165 FP16/bfloat16 TFLOPS.
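
The "slightly more than 50%" figure is easy to verify from the table. The short Python sketch below is only an illustration: the dictionary and function names are our own, and the numbers are Nvidia’s peak ratings rather than measured results.

```python
# Peak throughput from the comparison table, in TFLOPS (A100 for PCIe vs. A30).
A100_PCIE = {"FP64": 9.7, "FP32": 19.5, "TF32": 156.0, "FP16": 312.0}
A30 = {"FP64": 5.2, "FP32": 10.3, "TF32": 82.0, "FP16": 165.0}


def relative_throughput(small: dict[str, float], big: dict[str, float]) -> dict[str, float]:
    """Return small/big for every precision that both GPUs list."""
    return {fmt: small[fmt] / big[fmt] for fmt in big if fmt in small}


if __name__ == "__main__":
    for fmt, ratio in relative_throughput(A30, A100_PCIE).items():
        print(f"{fmt}: A30 delivers {ratio:.1%} of the A100")
```

Every ratio lands between roughly 52% and 54%, which matches the description above.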

When it comes to memory, the unit is equipped with 24GB of DRAM offering 933GB/s of bandwidth (we suspect Nvidia uses three HBM2 stacks at around 2.4 GT/s, but the company has not confirmed this). The memory subsystem seems to lack ECC support, which might be a limitation for those who need to work with large datasets. Effectively, Nvidia wants these customers to use its more expensive A100.
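
The three-stack guess follows from simple arithmetic: peak bandwidth equals bus width times per-pin data rate, divided by eight bits per byte. A minimal Python sketch, assuming a 1024-bit interface per HBM2 stack and using the A100 for PCIe (five active stacks, i.e. a 5120-bit bus) as a sanity check; the helper names are hypothetical.

```python
HBM2_STACK_WIDTH_BITS = 1024  # each HBM2 stack exposes a 1024-bit interface


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gts: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x transfers per second / 8 bits per byte."""
    return bus_width_bits * data_rate_gts / 8


def required_data_rate_gts(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Per-pin data rate (GT/s) needed to reach a given bandwidth on a given bus."""
    return bandwidth_gbs * 8 / bus_width_bits


if __name__ == "__main__":
    a30_bus = 3 * HBM2_STACK_WIDTH_BITS  # assumption: three stacks, i.e. a 3072-bit bus
    print(f"A30 needs ~{required_data_rate_gts(933, a30_bus):.2f} GT/s per pin")
    # Sanity check: A100 for PCIe with five active stacks (5120-bit) at 2.43 GT/s.
    print(f"A100: {peak_bandwidth_gbs(5 * HBM2_STACK_WIDTH_BITS, 2.43):.0f} GB/s")
```

Three stacks at about 2.43 GT/s yield 933GB/s, the same per-pin rate that gives the A100 its 1,555GB/s over five stacks.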

Nvidia traditionally does not disclose the precise specifications of its compute GPUs at launch, but we suspect that the A30 is exactly ‘half’ of the A100, with 3456 CUDA cores. This has not been confirmed.
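
The core-count guess can be checked against the FP32 rating, since peak FP32 throughput equals CUDA cores × 2 FLOPs per clock (one fused multiply-add) × boost clock. This Python sketch assumes the A100’s 6912 CUDA cores and our unconfirmed 3456-core figure for the A30; it only shows which clock each rating would imply.

```python
FLOPS_PER_CORE_PER_CLOCK = 2  # one fused multiply-add (FMA) counts as two FP32 operations


def implied_clock_ghz(fp32_tflops: float, cuda_cores: int) -> float:
    """Boost clock (GHz) implied by a peak FP32 rating and a CUDA core count."""
    return fp32_tflops * 1e12 / (cuda_cores * FLOPS_PER_CORE_PER_CLOCK) / 1e9


if __name__ == "__main__":
    a100_cores = 6912
    a30_cores_guess = a100_cores // 2  # speculative 'half an A100': 3456 cores
    print(f"A100: {implied_clock_ghz(19.5, a100_cores):.2f} GHz")
    print(f"A30 (if 3456 cores): {implied_clock_ghz(10.3, a30_cores_guess):.2f} GHz")
```

Under these assumptions the A100 works out to about 1.41 GHz and a 3456-core A30 to about 1.49 GHz, so a strict ‘half’ of the A100 would need a slightly higher boost clock to reach 10.3 TFLOPS.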

Nvidia’s A30 comes in a dual-slot, full-height, full-length (FHFL) form factor with a PCIe 4.0 x16 interface and a 165W TDP, down from 250W for the FHFL A100. Meanwhile, the A30 supports one NVLink at 200 GB/s (down from 600 GB/s on the A100).

The Nvidia A10: A GPU for AI, Graphics, and Video

Nvidia’s A10 does not derive from the compute-oriented A100 and A30; it is an entirely different product aimed at graphics, AI inference, and video encoding/decoding workloads. The A10 supports the FP32, TF32, bfloat16, FP16, INT8, and INT4 formats for graphics and AI, but not the FP64 precision required for HPC.

The A10 is a single-slot FHFL graphics card with a PCIe 4.0 x16 interface, designed for servers that run Nvidia RTX Virtual Workstation (vWS) software and remotely power workstations needing both AI and graphics capabilities. To a large degree, the A10 is expected to serve as a remote workhorse for artists, designers, engineers, and scientists who do not need FP64.

Nvidia’s A10 appears to be based on the GA102 silicon (or a derivative), but since it supports INT8 and INT4 precisions, we cannot be 100% sure that it is physically the same processor that powers Nvidia’s GeForce RTX 3080/3090 and RTX A6000 cards. Meanwhile, the A10’s performance (31.2 FP32 TFLOPS, 125 FP16 TFLOPS) is in the range of the GeForce RTX 3080. The card comes with 24GB of GDDR6 memory offering 600GB/s of bandwidth, which suggests the same 384-bit memory interface as the RTX 3090, but without the data rates of GDDR6X (or its power draw and temperatures).
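
The memory-interface reasoning can be worked out the same way. Assuming the A10 uses a 384-bit bus like the RTX 3090 (Nvidia has not confirmed this), the Python sketch below computes the per-pin data rate needed for 600GB/s and, for comparison, the RTX 3090’s bandwidth with 19.5 Gbps GDDR6X. The function names are illustrative only.

```python
def per_pin_rate_gbps(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbps) required for a given bandwidth on a given bus width."""
    return bandwidth_gbs * 8 / bus_width_bits


def bandwidth_gbs(bus_width_bits: int, per_pin_gbps: float) -> float:
    """Peak bandwidth (GB/s) from bus width and per-pin data rate."""
    return bus_width_bits * per_pin_gbps / 8


if __name__ == "__main__":
    bus = 384  # assumption: the A10 uses the same 384-bit interface as the RTX 3090
    print(f"A10 GDDR6: {per_pin_rate_gbps(600, bus):.1f} Gbps per pin")
    print(f"RTX 3090 GDDR6X at 19.5 Gbps: {bandwidth_gbs(bus, 19.5):.0f} GB/s")
```

The result, 12.5 Gbps per pin, sits comfortably within the range of standard GDDR6, whereas the RTX 3090’s GDDR6X reaches 936GB/s on the same bus width.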

Pricing and Availability

Nvidia expects its partners to start offering machines with its A30 and A10 GPUs later this year.